API Gateway Patterns and Practices

An API gateway is a component that consolidates incoming client requests and forwards them to the appropriate backend services. Along the way, it takes on tasks such as routing, rate limiting, authentication, and monitoring, which simplifies the relationship between front-end applications and back-end services. Its importance is hard to overstate: besides being the main entry point through which clients reach multiple backend services, it handles cross-cutting concerns such as security, logging, and load balancing in one place. This article discusses what an API gateway does and the patterns and practices that make a microservices architecture more effective.


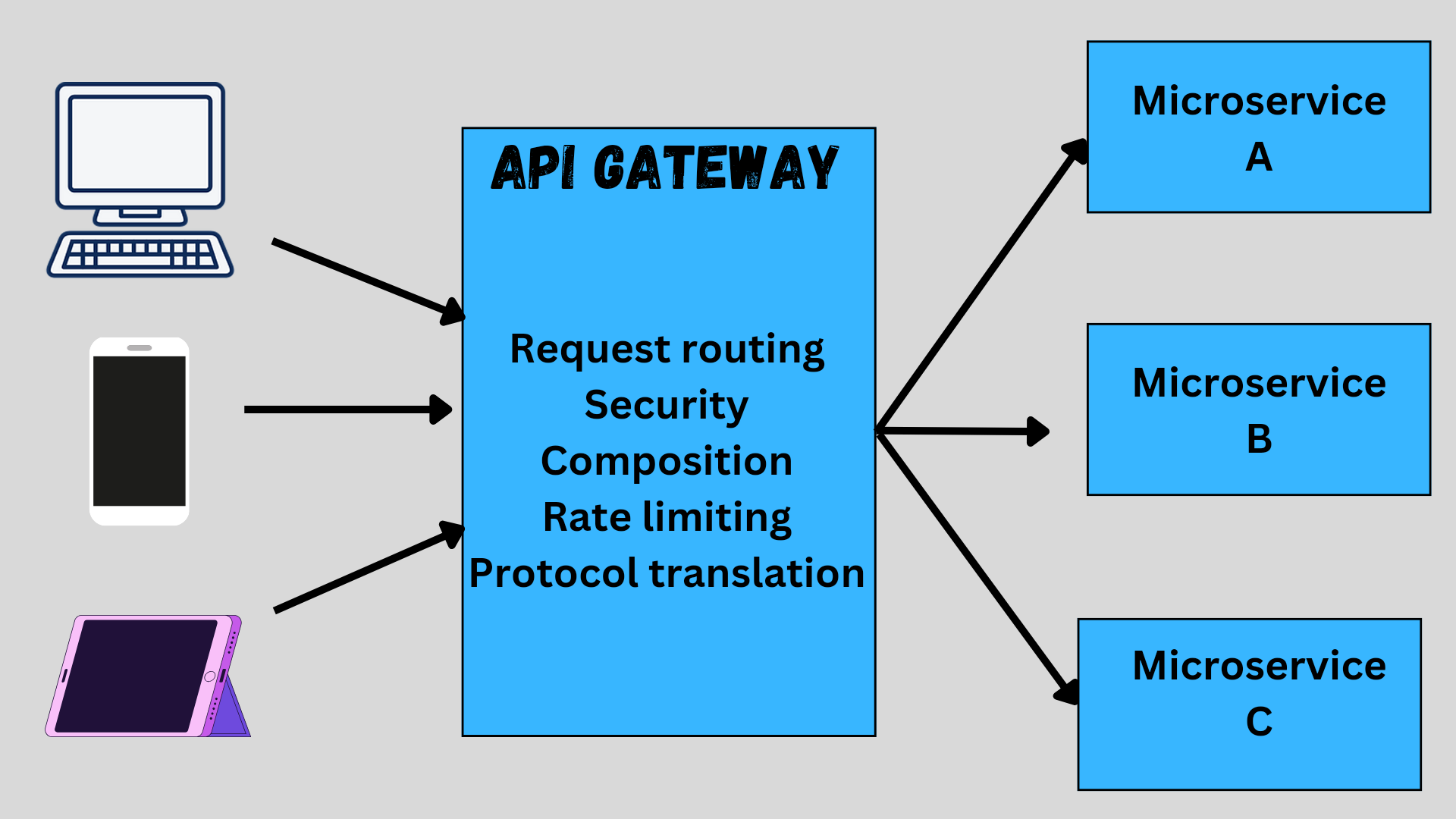

A gateway acts as a consolidation point through which client requests pass on their way to backend services. The image below shows the path a client request takes through the gateway to the backend:

Routing requests is not a gateway's only job, though. It can take on many other functions, such as security, response composition, and rate limiting. Let's look at these functions and how each one makes the interaction between clients and services smoother.

Request Routing

Request routing is an API gateway's most basic role in a microservices-based architecture. The gateway inspects the details of each client request, including the path, HTTP method, headers, and parameters, to determine which backend service should handle it. In this way, the gateway works like an intelligent dispatcher: it forwards requests without exposing the system's internal architecture to clients. Because this routing logic lives in one place, it also reduces complexity, since developers can change where backend services are located without touching client code. This simplifies client-side development and makes the system more flexible and scalable.
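The routing decision described above can be sketched as a lookup in a route table. This is a minimal illustration, not how any particular gateway product works: the service names and URLs are hypothetical, and the rule of choosing the longest matching path prefix that allows the request's HTTP method is an assumption made for this example.

```javascript
// Hypothetical route table: path prefix + allowed methods -> backend service URL.
// The service names and URLs are illustrative assumptions.
const routes = [
  { prefix: "/api/users", methods: ["GET", "POST"], target: "http://user-service:8080" },
  { prefix: "/api/orders", methods: ["GET", "POST", "DELETE"], target: "http://order-service:8080" },
  { prefix: "/api", methods: ["GET"], target: "http://default-service:8080" },
];

// Pick the route with the longest matching path prefix that allows the method.
function resolveRoute(method, path) {
  let best = null;
  for (const route of routes) {
    const matchesPath = path === route.prefix || path.startsWith(route.prefix + "/");
    if (matchesPath && route.methods.includes(method)) {
      if (!best || route.prefix.length > best.prefix.length) best = route;
    }
  }
  // Build the upstream URL; the client never sees the internal target.
  return best ? best.target + path : null;
}

console.log(resolveRoute("GET", "/api/users/42")); // http://user-service:8080/api/users/42
console.log(resolveRoute("DELETE", "/api/users/42")); // null (no route allows DELETE here)
```

Because clients only ever see the public paths, moving a service means editing one entry in the table rather than changing client code.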

Request routing also supports load balancing and failover. Load balancing distributes incoming requests across the instances of a service, which keeps traffic even and prevents any single instance from becoming a bottleneck. With failover, if a service instance fails, the API gateway reroutes its requests to healthy instances. Together, these features keep the application stable and give users a consistent experience.
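Here is a minimal sketch of round-robin load balancing with failover, assuming the gateway already knows which instances are unhealthy. The instance addresses are made up, and the `markDown`/`markUp` methods are hypothetical stand-ins for the active health checks or error tracking a real gateway would use.

```javascript
// Round-robin load balancer with failover over hypothetical service instances.
class RoundRobinBalancer {
  constructor(instances) {
    this.instances = instances;
    this.healthy = new Set(instances); // assume every instance starts healthy
    this.next = 0; // index where the next search starts
  }

  markDown(instance) {
    this.healthy.delete(instance);
  }

  markUp(instance) {
    this.healthy.add(instance);
  }

  // Return the next healthy instance in rotation, or null if none are healthy.
  pick() {
    for (let i = 0; i < this.instances.length; i++) {
      const index = (this.next + i) % this.instances.length;
      const candidate = this.instances[index];
      if (this.healthy.has(candidate)) {
        this.next = (index + 1) % this.instances.length;
        return candidate;
      }
    }
    return null;
  }
}

const lb = new RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]);
console.log(lb.pick(), lb.pick(), lb.pick()); // 10.0.0.1:8080 10.0.0.2:8080 10.0.0.3:8080
lb.markDown("10.0.0.2:8080"); // failover: traffic now skips this instance
console.log(lb.pick(), lb.pick(), lb.pick()); // 10.0.0.1:8080 10.0.0.3:8080 10.0.0.1:8080
```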

Composition

Composition is useful when a single client request needs data from several backend services. Instead of the client making several requests and merging the responses itself, the API gateway does that work: after receiving the client's request, it calls the necessary services (often in parallel), collects their data, and returns it to the client as a single response. This removes extra round trips between client and server, which lowers overhead and improves the client application's performance.
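The sketch below shows composition with partial-failure handling. The two service functions are hypothetical stand-ins for real HTTP calls, and returning `null` plus an `errors` list for a failed part is an assumed convention; your clients may expect something different.

```javascript
// Hypothetical backend calls; in a real gateway these would be HTTP requests.
const getProfile = async (userId) => ({ id: userId, name: "Jane Doe" });
const getRecommendations = async (userId) => {
  throw new Error(`recommendation-service unavailable for user ${userId}`);
};

// Call both services in parallel and merge whatever succeeds into one response.
async function composeUserPage(userId) {
  const [profile, recs] = await Promise.allSettled([
    getProfile(userId),
    getRecommendations(userId),
  ]);

  return {
    profile: profile.status === "fulfilled" ? profile.value : null,
    recommendations: recs.status === "fulfilled" ? recs.value : null,
    // Tell the client which parts are missing so it can render a fallback.
    errors: [profile, recs]
      .filter((result) => result.status === "rejected")
      .map((result) => result.reason.message),
  };
}

composeUserPage(7).then((page) => console.log(page));
```

Using `Promise.allSettled` rather than `Promise.all` is the key design choice here: `Promise.all` rejects as soon as one call fails, which would discard the profile data that did arrive.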

Protocol Translation

Protocol translation lets clients and services communicate even when they use different protocols. Services may speak HTTP, WebSocket, gRPC, and so on. The API gateway sits between the client and the backend service, translating the client's request into a form the backend understands and translating the response back. This is especially valuable in evolving systems, where new services may use different protocols. It gives developers room to introduce new technologies and protocols without changing existing client applications.
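To make the idea concrete, the sketch below translates a REST-style HTTP request into a JSON-RPC 2.0 request object, the kind of message an internal service speaking JSON-RPC might expect, and maps the JSON-RPC response back to an HTTP status and body. JSON-RPC is used only because it needs no extra libraries (translating to gRPC would require generated client stubs). The `resource.action` method naming and the choice to map every RPC error to HTTP 502 are assumptions made for illustration.

```javascript
// Map HTTP methods to hypothetical RPC action names ("<resource>.<action>").
const actions = { GET: "get", POST: "create", PUT: "update", DELETE: "delete" };

let nextId = 1; // JSON-RPC request ids, so responses can be matched to calls

// Translate a REST-style request into a JSON-RPC 2.0 request object.
function restToJsonRpc({ method, path, query = {}, body = {} }) {
  const [resource, id] = path.split("/").filter(Boolean); // "/users/42" -> ["users", "42"]
  const action = actions[method];
  if (!resource || !action) throw new Error(`Cannot translate ${method} ${path}`);

  return {
    jsonrpc: "2.0",
    method: `${resource}.${action}`,
    params: { ...query, ...body, ...(id !== undefined ? { id } : {}) },
    id: nextId++,
  };
}

// Translate a JSON-RPC response back into an HTTP status and body for the client.
// Mapping every RPC error to 502 (Bad Gateway) is a simplifying assumption.
function jsonRpcToRest(rpcResponse) {
  if (rpcResponse.error) {
    return { status: 502, body: { message: rpcResponse.error.message } };
  }
  return { status: 200, body: rpcResponse.result };
}

console.log(restToJsonRpc({ method: "GET", path: "/users/42", query: { fields: "name" } }));
```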

Rate Limiting and Throttling

Rate limiting and throttling keep backend services from being overwhelmed by traffic. Rate limiting caps the number of requests a client can make within a given period, which protects system availability and keeps performance predictable. Throttling, by contrast, shapes traffic: when load is high, it delays or queues requests instead of rejecting them. Both features help keep services available during peak traffic.
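A common way to implement both behaviors is a token bucket per client. The sketch below is a minimal, single-process version: the capacity and refill rate are arbitrary, and the current time is passed in as a parameter so the example is deterministic. When the bucket is empty, `take` reports how long the client would have to wait; a rate limiter would reject the request (typically with HTTP 429), while a throttler could delay it by that amount instead.

```javascript
// Token bucket rate limiter, one bucket per client ID (in memory, single process).
class TokenBucketLimiter {
  constructor({ capacity, refillPerSecond }) {
    this.capacity = capacity; // maximum burst size
    this.refillPerSecond = refillPerSecond; // sustained request rate
    this.buckets = new Map(); // clientId -> { tokens, lastRefill }
  }

  // Returns { allowed: true } or { allowed: false, retryAfterMs }.
  take(clientId, nowMs = Date.now()) {
    let bucket = this.buckets.get(clientId);
    if (!bucket) {
      bucket = { tokens: this.capacity, lastRefill: nowMs };
      this.buckets.set(clientId, bucket);
    }

    // Refill tokens for the time elapsed since the last request, up to capacity.
    const elapsedSeconds = (nowMs - bucket.lastRefill) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSeconds * this.refillPerSecond);
    bucket.lastRefill = nowMs;

    if (bucket.tokens >= 1) {
      bucket.tokens -= 1;
      return { allowed: true };
    }
    // Time until one whole token is available: reject (rate limit) or wait (throttle).
    const retryAfterMs = Math.ceil(((1 - bucket.tokens) / this.refillPerSecond) * 1000);
    return { allowed: false, retryAfterMs };
  }
}

const limiter = new TokenBucketLimiter({ capacity: 2, refillPerSecond: 1 });
console.log(limiter.take("client-a", 0)); // { allowed: true }
console.log(limiter.take("client-a", 0)); // { allowed: true }
console.log(limiter.take("client-a", 0)); // { allowed: false, retryAfterMs: 1000 }
console.log(limiter.take("client-a", 1000)); // { allowed: true }
```

Because this state lives in one process's memory, several gateway instances would each enforce their own limit; sharing the counters requires an external store.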

Security (Authentication and Authorization)

Authentication and authorization are major functions of API gateways, and security is always a top priority. Because the gateway is the first component to receive requests from clients, it is well placed to verify a client's identity and check whether that client may access the requested resource. Authentication can rely on several methods, such as certificates, API keys, or tokens, to confirm who the client is. Authorization then determines which operations the authenticated client may perform and which data it may access. Enforcing both at the gateway helps prevent unauthorized access and data leaks, and reduces the risk of inconsistent security settings across services.
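The sketch below separates the two steps using API keys. Authentication compares the presented key in constant time with `crypto.timingSafeEqual` from Node's built-in `crypto` module; authorization then checks whether the client holds the scope the route requires. The key registry, client names, and scope strings are hypothetical, and in practice keys would be stored hashed in a secrets store rather than in code.

```javascript
const crypto = require("crypto");

// Hypothetical API key registry: client ID -> API key and granted scopes.
// In practice, store hashed keys in a secrets store, not in source code.
const clients = new Map([
  ["mobile-app", { key: "mk_3f9a1c", scopes: ["orders:read"] }],
  ["admin-console", { key: "ak_77b2e0", scopes: ["orders:read", "orders:write"] }],
]);

// Constant-time string comparison, so response timing doesn't reveal
// how many leading characters of a guessed key were correct.
function safeEqual(a, b) {
  if (typeof a !== "string" || typeof b !== "string") return false;
  const bufA = Buffer.from(a);
  const bufB = Buffer.from(b);
  return bufA.length === bufB.length && crypto.timingSafeEqual(bufA, bufB);
}

// Authentication: is the caller who it claims to be?
function authenticate(clientId, apiKey) {
  const client = clients.get(clientId);
  return client !== undefined && safeEqual(client.key, apiKey);
}

// Authorization: may this authenticated client perform the operation?
function authorize(clientId, requiredScope) {
  return clients.get(clientId).scopes.includes(requiredScope);
}

// Gateway decision: 401 = not authenticated, 403 = authenticated but not allowed.
function checkAccess(clientId, apiKey, requiredScope) {
  if (!authenticate(clientId, apiKey)) return 401;
  return authorize(clientId, requiredScope) ? 200 : 403;
}

console.log(checkAccess("mobile-app", "mk_3f9a1c", "orders:write")); // 403
```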

Monitoring and Logging

Monitoring and logging let you observe a system, keep it healthy, and identify what needs fixing when problems arise. Because the API gateway can log every incoming request and outgoing response, it produces a trail that supports performance and security auditing as well as debugging. These logs show how the system is used, where problems occur, and what causes them as they develop. The API gateway can also feed monitoring systems that report on system performance in real time. Dashboards built from metrics such as request rates, response times, error rates, and traffic volume give you early warning about your system's health.
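As a small illustration of the metrics mentioned above, the sketch below records each request's route, status code, and duration, then summarizes request count, error rate, and 95th-percentile latency per route. It is an in-memory toy: the assumption is that a real deployment would export these numbers to a monitoring system, and the route name and sample durations are made up.

```javascript
// In-memory per-route request metrics (illustrative only; a real gateway would
// export these numbers to a monitoring system instead of keeping them in a Map).
class GatewayMetrics {
  constructor() {
    this.byRoute = new Map(); // route -> { count, errors, durations }
  }

  record(route, statusCode, durationMs) {
    if (!this.byRoute.has(route)) {
      this.byRoute.set(route, { count: 0, errors: 0, durations: [] });
    }
    const stats = this.byRoute.get(route);
    stats.count += 1;
    if (statusCode >= 500) stats.errors += 1; // count server-side failures as errors
    stats.durations.push(durationMs);
  }

  summary(route) {
    const stats = this.byRoute.get(route);
    if (!stats) return null;
    const sorted = [...stats.durations].sort((a, b) => a - b);
    // Nearest-rank 95th percentile: the value at position ceil(0.95 * n) in sorted order.
    const p95Ms = sorted[Math.ceil(0.95 * sorted.length) - 1];
    return { requests: stats.count, errorRate: stats.errors / stats.count, p95Ms };
  }
}

const metrics = new GatewayMetrics();
[120, 80, 95, 300, 110].forEach((ms, i) => metrics.record("/orders", i === 3 ? 503 : 200, ms));
console.log(metrics.summary("/orders")); // { requests: 5, errorRate: 0.2, p95Ms: 300 }
```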

Why is an API Gateway Important for the Front End?

API gateways are a valuable part of front-end development because they mediate between the front-end application and the backend microservices. Beyond connecting the two, they also improve performance, security, and resilience. Let's look more closely at why an API gateway matters for the front end.

Simplified Integration With Backend Services

An application built on a microservice architecture consists of many services, each responsible for a particular task. Without an API gateway, a front-end developer has to manage many separate API endpoints, each with its own rate limits, authentication, and data formats. This quickly gets out of hand and becomes error-prone as the number of services grows. An API gateway solves this by placing all these services behind a single entry point, so developers work with one unified API. This frees developers from having to deal with issues such as protocol differences or load balancing.

Improves User Experience Through Optimized Performance

Performance is central to user experience, and an API gateway plays a large part in how efficiently data reaches the front end. Its caching and load balancing functions keep request processing fast. Caching stores frequently requested data, which reduces the number of calls to backend services. Load balancing distributes incoming requests across the available service instances, which lowers the chance of any single instance becoming a bottleneck. Together, these reduce latency so that clients receive timely responses.

Enhanced Security Without Extra Overhead

An API gateway improves security without adding components that would complicate the system. Because authentication and authorization are among its core tasks, every request is vetted before it is routed to the backend services. As mentioned earlier, the gateway can also apply rate limiting to help protect your system against abuse and several kinds of threats. Keeping these security functions at the gateway level means you don't have to implement and maintain the same security settings in every service.

Better Error Handling and Resilience

An API gateway also makes the front end more tolerant of errors and more robust during service interruptions. For example, when a backend service fails, the gateway can redirect requests to healthy services or return cached responses. This keeps the front end running and shields users from failures that would otherwise affect them. The gateway can also help the front end handle failures by returning detailed error messages, so the front end can respond appropriately to the user. For instance, the gateway may report that a specific service is unavailable, and the front end can then ask the user to try again later.
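The sketch below combines both behaviors in one function: if the backend call fails, the gateway returns the last good response it cached, and if nothing is cached, it returns a structured 503 error with a `Retry-After` hint the front end can act on. The `callBackend` functions, the `stale` flag, and the error body format are assumptions made for illustration.

```javascript
// Last successful response per key, used as a fallback when a backend fails.
const lastGood = new Map();

// `callBackend` is a hypothetical function returning a promise of the service's data.
async function withFallback(key, callBackend, serviceName) {
  try {
    const data = await callBackend();
    lastGood.set(key, data);
    return { status: 200, body: data };
  } catch (err) {
    if (lastGood.has(key)) {
      // Serve the last good data, flagged as stale so the UI can show a notice.
      return { status: 200, stale: true, body: lastGood.get(key) };
    }
    // Nothing cached: tell the front end what failed and when to retry.
    return {
      status: 503,
      headers: { "Retry-After": "30" },
      body: {
        error: "SERVICE_UNAVAILABLE",
        message: `The ${serviceName} is temporarily unavailable. Please try again later.`,
      },
    };
  }
}

// Usage: one successful call, then a failure that falls back to the cached data.
const healthyCall = async () => ({ items: ["a", "b"] });
const failingCall = async () => {
  throw new Error("ECONNREFUSED");
};

withFallback("catalog", healthyCall, "catalog service")
  .then(() => withFallback("catalog", failingCall, "catalog service"))
  .then((result) => console.log(result.status, result.stale)); // 200 true
```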

Common API Gateway Patterns

API gateways can be built around particular patterns that determine how client requests are processed. Each pattern addresses a different problem, and understanding them will help you make suitable decisions for your system. Let's look at some of the common patterns.

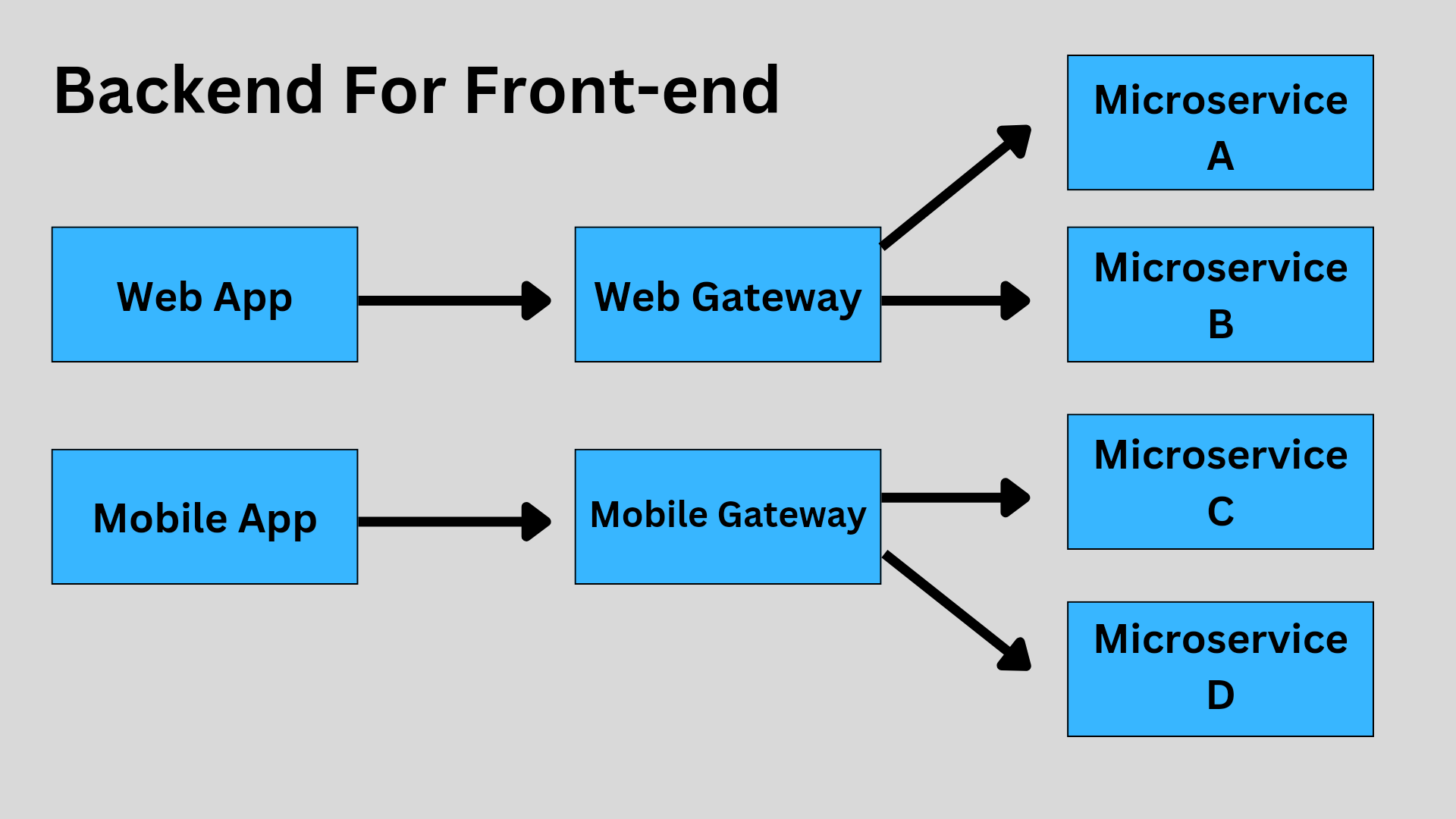

Backends for Frontends (BFF)

The backends for frontends (BFF) pattern addresses the needs of different kinds of client applications, such as web, mobile, and desktop. Here, the gateway provides a separate backend tailored to each client type, which handles data in the way that particular client needs. The flow should look something like the following:

With this pattern, developers can make sure every client gets exactly what it needs without extra complexity. It reduces the processing done on the client side and minimizes the client's back-and-forth with the backend. Here's a simple code example in Express.js:

```javascript
// Express.js example for a BFF pattern
const express = require("express");

const app = express();
const port = 3000;

// Mobile Backend
app.get("/mobile/user", (req, res) => {
  // Fetch and process data specifically for mobile users
  res.json({ id: 1, name: "John Doe", mobileOptimized: true });
});

// Web Backend
app.get("/web/user", (req, res) => {
  // Fetch and process data specifically for web users
  res.json({
    id: 1,
    name: "John Doe",
    mobileOptimized: false,
    additionalData: "More data for web",
  });
});

app.listen(port, () => console.log(`BFF service running on port ${port}`));
```

This creates two endpoints: /mobile/user for mobile clients and /web/user for web clients. Each endpoint returns data shaped to meet the needs of its client.

API Gateway Aggregation

API gateway aggregation combines data from many microservices into one response. In a microservices architecture, a single client request often needs information from several services, since each one handles a particular part of the application's functionality. Rather than having the client call each service separately, the gateway aggregates all the data on the server side and returns it in a single response. Here's a code example in Express.js:

```javascript
// Express.js example for API Gateway Aggregation
const express = require("express");
const axios = require("axios");

const app = express();
const port = 3000;

app.get("/aggregated-data", async (req, res) => {
  try {
    // The two calls are independent, so issue them in parallel
    const [userResponse, ordersResponse] = await Promise.all([
      axios.get("http://user-service/users/1"),
      axios.get("http://order-service/orders/user/1"),
    ]);

    // Combine data from different services
    const aggregatedData = {
      user: userResponse.data,
      orders: ordersResponse.data,
    };

    res.json(aggregatedData);
  } catch (error) {
    res.status(500).send("Error fetching data");
  }
});

app.listen(port, () => console.log(`API Gateway running on port ${port}`));
```

The /aggregated-data endpoint retrieves user data from user-service and order data from order-service, then returns a combined response. Because Promise.all issues both calls at once, the total wait is roughly that of the slower call rather than the sum of both.

Edge Functions

Edge functions improve the performance of API gateways by taking advantage of the distributed topology of CDNs and edge computing. With this pattern, features such as request routing, caching, and security checks run close to end users, at the edge of the network. Rather than being hosted centrally on a single server, edge functions spread the load across multiple edge locations. Here's a simple example in JavaScript:

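```javascript
// Example using Cloudflare Workers as edge functions (Service Worker syntax)
addEventListener("fetch", (event) => {
  event.respondWith(handleRequest(event));
});

async function handleRequest(event) {
  const request = event.request;
  const url = new URL(request.url);

  // Simple routing logic: serve /cached-data from the edge cache when possible
  if (url.pathname === "/cached-data" && request.method === "GET") {
    const cache = caches.default; // Cache of the edge location handling this request
    let response = await cache.match(request);

    if (!response) {
      // Cache miss: fetch from the origin server and store a copy at the edge
      response = await fetch(request);
      if (response.ok) {
        event.waitUntil(cache.put(request, response.clone()));
      }
    }
    return response;
  }

  // Every other path: fetch from the origin server
  return fetch(request);
}
```

The example above shows an edge function deployed with Cloudflare Workers. For GET requests to /cached-data, it serves the response from the cache at the edge location handling the request when one is available and only contacts the origin server on a cache miss, which reduces latency. All other requests are passed through to the origin.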

Circuit Breaker

The circuit breaker is a resilience pattern that protects a system from the effects of a failing service. It keeps track of a service's health, and when the service starts to degrade or stops responding, the circuit breaker trips and blocks further requests to it for a while. Here's a Node.js example of how it stops a failing service from continuing to receive traffic:

```javascript
// Simple Circuit Breaker example in Node.js
const CircuitBreaker = require("opossum");
const axios = require("axios");

// Service call wrapped in a circuit breaker
const fetchServiceData = () => axios.get("http://unreliable-service/data");

const breaker = new CircuitBreaker(fetchServiceData, {
  timeout: 3000, // Count a call as failed if the service doesn't respond within 3 seconds
  errorThresholdPercentage: 50, // Open (trip) the circuit once 50% of requests fail
  resetTimeout: 5000, // After 5 seconds, let a trial request through to test recovery
});

// Returned instead of an error when the call fails or the circuit is open.
// It mirrors the shape of an axios response so the handler below works either way.
breaker.fallback(() => ({ data: "Fallback data due to service failure" }));

breaker
  .fire()
  .then((response) => console.log(response.data))
  .catch((error) => console.error("Service failed", error));
```

The example above uses the opossum library to place a circuit breaker around a service call. If the service fails too often or responds too slowly, the breaker trips and the fallback is returned instead of an error. This keeps a failing service from dragging down the rest of the application.
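To show the state machine a library like opossum manages for you, here is a stripped-down circuit breaker written from scratch. It is a simplified sketch, not opossum's implementation: it trips after a fixed number of consecutive failures rather than a failure percentage, it has no timeout handling, and it takes the clock as a parameter so the state transitions are easy to test.

```javascript
// Minimal circuit breaker: CLOSED -> OPEN after N consecutive failures,
// OPEN -> HALF_OPEN after resetTimeoutMs, HALF_OPEN -> CLOSED on one success.
class SimpleCircuitBreaker {
  constructor(action, { failureThreshold = 3, resetTimeoutMs = 5000, now = Date.now } = {}) {
    this.action = action;
    this.failureThreshold = failureThreshold;
    this.resetTimeoutMs = resetTimeoutMs;
    this.now = now; // injectable clock
    this.state = "CLOSED";
    this.failures = 0;
    this.openedAt = 0;
  }

  async fire(...args) {
    if (this.state === "OPEN") {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        throw new Error("Circuit open: request rejected without calling the service");
      }
      this.state = "HALF_OPEN"; // let a trial request through
    }
    try {
      const result = await this.action(...args);
      this.state = "CLOSED";
      this.failures = 0;
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed trial request, or too many failures in a row, opens the circuit.
      if (this.state === "HALF_OPEN" || this.failures >= this.failureThreshold) {
        this.state = "OPEN";
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```

A production breaker would also limit how many trial requests pass through in the half-open state; this sketch lets every request through once the reset timeout has elapsed.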

Best Practices for Implementing API Gateways

Before implementing an API gateway, it helps to know several best practices that keep it performing well. These include planning for scalability, securing the gateway, versioning your API, caching, and logging and monitoring.

Scalability Considerations

Addressing scalability early helps the gateway maintain good performance as traffic grows. Horizontal scaling is the primary practice here: it involves deploying multiple instances of the API gateway across different servers and using a load balancer to distribute incoming traffic evenly across them. Here's an example Kubernetes configuration in YAML:

```yaml
# Example Kubernetes deployment for horizontal scaling
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-gateway
spec:
  replicas: 3 # Number of API Gateway instances
  selector:
    matchLabels:
      app: api-gateway
  template:
    metadata:
      labels:
        app: api-gateway
    spec:
      containers:
        - name: api-gateway
          image: api-gateway:latest
          ports:
            - containerPort: 8080
```

The Kubernetes Deployment above scales the API gateway horizontally by running three replicas. A Kubernetes Service (or an external load balancer) in front of these pods spreads incoming traffic across the replicas so that no single instance is overloaded, and you can raise the replica count as traffic grows.

Security Best Practices

Authentication and authorization, discussed earlier, are the foundation of gateway security: they keep intruders out by allowing only users with the right permissions to reach your APIs. Here's a simple example in Express.js:

```javascript
// Express.js example enforcing JWT authentication
const express = require("express");
const jwt = require("jsonwebtoken");

const app = express();

// Read the signing secret from the environment rather than hard-coding it
const secretKey = process.env.JWT_SECRET;
if (!secretKey) throw new Error("Set the JWT_SECRET environment variable");

// Middleware to check a JWT sent as "Authorization: Bearer <token>"
function authenticateToken(req, res, next) {
  const token = req.header("Authorization")?.split(" ")[1];
  if (!token) return res.sendStatus(401); // No token supplied

  jwt.verify(token, secretKey, (err, user) => {
    if (err) return res.sendStatus(403); // Invalid or expired token
    req.user = user; // Decoded token payload
    next();
  });
}

// Protected route
app.get("/protected", authenticateToken, (req, res) => {
  res.json({ message: "This is a protected route", user: req.user });
});

app.listen(3000, () => console.log("API Gateway running on port 3000"));
```

The code above uses JSON Web Tokens (JWTs) for authentication. The authenticateToken middleware reads the bearer token from the Authorization header and verifies it, so requests without a valid token cannot reach the protected route: a missing token receives a 401 response, and an invalid or expired token receives a 403.

API Versioning

API versioning lets you roll out new API implementations without affecting existing clients. With versioning in place, the gateway can route each request according to the API version it specifies. Maintaining backward compatibility is essential, because it ensures that existing clients keep working. Here's a simple example of API versioning in Express.js:

```javascript
// Express.js example handling API versioning
const express = require("express");

const app = express();

// Each API version gets its own route prefix
app.get("/v1/resource", (req, res) => {
  res.json({ data: "Response from API version 1" });
});

app.get("/v2/resource", (req, res) => {
  res.json({ data: "Response from API version 2" });
});

app.listen(3000, () =>
  console.log("API Gateway handling versioning on port 3000")
);
```

This example implements simple URI versioning, with a separate route for each API version (/v1/resource and /v2/resource). Existing clients can keep calling /v1 while newer versions are developed, which preserves backward compatibility.
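URI versioning is only one option. The sketch below shows how a gateway might resolve the requested version from either the path or a request header, falling back to a default. The `api-version` header name, the default version, and the list of supported versions are hypothetical choices, not a standard.

```javascript
// Versions this gateway can route; the default serves clients that don't ask.
const SUPPORTED_VERSIONS = ["v1", "v2"];
const DEFAULT_VERSION = "v1";

// Resolve the API version from the path ("/v2/resource") first, then from a
// hypothetical "api-version" header, then fall back to the default.
function resolveVersion(path, headers = {}) {
  const fromPath = path.match(/^\/(v\d+)\//);
  const requested = fromPath ? fromPath[1] : headers["api-version"] || DEFAULT_VERSION;

  if (!SUPPORTED_VERSIONS.includes(requested)) {
    return { error: `Unsupported API version: ${requested}` };
  }
  // Strip the version prefix so the backend sees the plain resource path.
  const resourcePath = fromPath ? path.slice(fromPath[0].length - 1) : path;
  return { version: requested, resourcePath };
}

console.log(resolveVersion("/v2/resource")); // { version: 'v2', resourcePath: '/resource' }
```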

Caching Strategies

Caching reduces the load on backend services and improves response times for clients. Caching at the gateway level brings many advantages, and it can be implemented with either an in-memory or a distributed caching system. Here is an example in Express.js:

```javascript
// Express.js example with simple in-memory caching
const express = require("express");

const cache = new Map(); // key -> cached response body (held in this process only)
const app = express();

app.get("/data", (req, res) => {
  // Cache hit: return the stored response without calling the backend
  const cachedResponse = cache.get("data");
  if (cachedResponse) {
    return res.json(cachedResponse);
  }

  // Cache miss: simulate fetching data from a backend service
  const data = { value: "Fresh data from backend service" };
  cache.set("data", data); // Cache the response for later requests
  res.json(data);
});

app.listen(3000, () =>
  console.log("API Gateway with caching running on port 3000")
);
```

The example above implements simple in-memory caching with a Map. The API gateway first checks for a cached response and, if one exists, returns it immediately. Otherwise, it fetches fresh data and caches it for later requests.
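The Map-based cache in the Express.js example never expires entries and can grow without bound. A common refinement, sketched below, adds a time-to-live (TTL) and a maximum size, evicting the least recently used entry when the cache is full. The default TTL and size are arbitrary assumptions, and the clock is injectable only to make the behavior easy to test.

```javascript
// In-memory cache with per-entry TTL and least-recently-used (LRU) eviction.
// A Map iterates in insertion order, so re-inserting a key marks it most recent.
class TtlLruCache {
  constructor({ maxEntries = 100, ttlMs = 30000, now = Date.now } = {}) {
    this.maxEntries = maxEntries;
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(key); // expired: treat as a miss
      return undefined;
    }
    this.entries.delete(key); // move to the end (most recently used)
    this.entries.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.entries.delete(key);
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    if (this.entries.size > this.maxEntries) {
      // The first key in iteration order is the least recently used one.
      const oldestKey = this.entries.keys().next().value;
      this.entries.delete(oldestKey);
    }
  }
}
```

Because the class exposes the same `get` and `set` calls the route already uses, the `/data` handler could switch to it by creating the cache as `new TtlLruCache({ ttlMs: 60000 })` instead of `new Map()`.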

Logging and Monitoring

Logging and monitoring help keep an API gateway performing well. Detailed logging captures information about every request and response, which is essential for troubleshooting and traffic analysis. Here's an example in Express.js:

```javascript
// Express.js example with basic logging
const express = require("express");
const morgan = require("morgan");

const app = express();

// Use morgan middleware to log every HTTP request
app.use(morgan("combined"));

app.get("/data", (req, res) => {
  res.json({ value: "Data with logging" });
});

app.listen(3000, () =>
  console.log("API Gateway with logging running on port 3000")
);
```

The code example above uses the morgan middleware for HTTP request logging. The "combined" format is the standard Apache combined log format: for every request, it records the client address, timestamp, method, URL, HTTP version, status code, response size, referrer, and user agent. It does not include response times; morgan formats such as "dev" and "tiny" add the :response-time token if you need them. These logs are useful whenever you need to investigate the gateway's behavior or performance.
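For logs that a log aggregator can parse, including the response time and a correlation ID, one option is to write a single JSON line per request. The sketch below uses only Node's built-in `crypto` module and the standard `finish` event of an HTTP response (`crypto.randomUUID` requires Node 14.17 or later). The `x-request-id` header is a widely used convention rather than a standard, and the log field names are assumptions.

```javascript
const crypto = require("crypto");

// Wrap a Node-style (req, res) handler so every request produces one JSON log line.
// Field names are illustrative; `log` defaults to console.log.
function withRequestLogging(handler, log = (line) => console.log(line)) {
  return (req, res) => {
    const start = process.hrtime.bigint();
    // Reuse an incoming request ID so logs can be correlated across services.
    const requestId = req.headers["x-request-id"] || crypto.randomUUID();
    res.setHeader("x-request-id", requestId);

    // "finish" fires once the response has been handed off for transmission.
    res.on("finish", () => {
      const durationMs = Number(process.hrtime.bigint() - start) / 1e6;
      log(
        JSON.stringify({
          requestId,
          method: req.method,
          url: req.url,
          status: res.statusCode,
          durationMs: Math.round(durationMs * 100) / 100,
        })
      );
    });

    handler(req, res);
  };
}

// Usage with Node's built-in HTTP server (not started in this sketch):
// const http = require("http");
// http.createServer(withRequestLogging((req, res) => res.end("ok"))).listen(3000);
```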

Conclusion

In this article, we have covered several core aspects of API gateways: what they are, why they are used, common patterns, and best practices to follow. If you follow most of the guidelines above, you will be able to implement an API gateway that meets most of your needs while leaving room for future changes. This flexibility supports the long-term sustainability of your systems and web applications.