Create a Screen Recorder with JavaScript

Screen recording captures your computer’s visual and audio activity, producing a video of what happens on your screen. It’s useful for tutorials, presentations, gameplay sessions, and software demonstrations. A screen recording application makes this easy by offering a simple interface for capturing and managing your recordings. This article shows you how to build one with JavaScript.


How to create a screen recorder in JavaScript

In this article, we will build a simple screen recording application using JavaScript, leveraging the MediaStream and MediaStream Recording APIs. You will learn to capture, record, and download your screen recordings. Here is a preview of what we will be building:

Understanding the MediaStream and MediaStream Recording APIs

The MediaStream and MediaStream Recording APIs are part of the web APIs that browsers provide natively. Together, they let a web page capture media such as screen, camera, and microphone input and record it, all from within the browser and without third-party libraries. Check out the official documentation for a complete list of available web APIs.

MediaStream API

The MediaStream API (formally, the Media Capture and Streams API) provides the interfaces and methods for working with media streams and the tracks they contain. One of these is the MediaDevices interface, available as navigator.mediaDevices, which gives access to connected media sources: its getUserMedia() method captures input devices such as webcams and microphones, while its getDisplayMedia() method (defined by the related Screen Capture API) captures the screen.

Both methods return a Promise that resolves to a MediaStream once the user grants permission. The difference lies in the source of the stream. getUserMedia() reads from user-facing input devices like webcams or microphones, whereas getDisplayMedia() captures the contents of a screen, application window, or browser tab chosen by the user, optionally with its audio where the browser supports it.
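To see how these methods are exposed, here is a minimal feature-detection sketch. detectCaptureSupport is a hypothetical helper, not part of the article’s code; it takes the navigator object as a parameter (pass the global navigator in a page) so it can also run outside a browser. It assumes only what is described above: both methods live on navigator.mediaDevices, which browsers leave undefined on pages not served from a secure context (HTTPS or localhost).

```javascript
/**
 * Reports which capture methods a navigator-like object exposes.
 * Hypothetical helper: pass the global `navigator` when running in a page.
 */
function detectCaptureSupport(nav) {
  // mediaDevices is undefined in insecure contexts, hence optional chaining
  const devices = nav?.mediaDevices;
  return {
    // getUserMedia(): webcams and microphones
    camerasAndMicrophones: typeof devices?.getUserMedia === "function",
    // getDisplayMedia(): screens, windows, and tabs
    screens: typeof devices?.getDisplayMedia === "function",
  };
}

// In a page: detectCaptureSupport(navigator)
```

Many mobile browsers expose getUserMedia() but not getDisplayMedia(), which is why a screen recorder should check for getDisplayMedia() specifically before trying to record.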

MediaStream Recording API

The MediaStream Recording API makes it possible to record the data carried by a MediaStream, which represents a stream of media content. The API centers on a single interface, MediaRecorder. You control a recording by calling its start(), pause(), resume(), and stop() methods, and the recorder fires matching start, pause, resume, and stop events as its state changes. It also fires a dataavailable event, a BlobEvent whose data property holds a Blob containing the recorded media.
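The recordStream function later in this article wires these events up by hand. As a compact illustration of how dataavailable and stop fit together, here is a minimal sketch that wraps a recorder in a Promise. recordToBlob is a hypothetical helper, not part of the article’s code, and it assumes the recorder emits dataavailable events whose data property is a Blob, as a real MediaRecorder does.

```javascript
/**
 * Collects every "dataavailable" chunk from a MediaRecorder-like object and
 * resolves with a single Blob once the recorder fires "stop".
 * Hypothetical helper, not part of the original code.
 */
function recordToBlob(recorder, type = "video/webm") {
  return new Promise((resolve, reject) => {
    const chunks = [];

    recorder.addEventListener("dataavailable", (event) => {
      // Skip empty chunks defensively
      if (event.data && event.data.size > 0) chunks.push(event.data);
    });

    // The final dataavailable event fires before stop, so all chunks are in
    recorder.addEventListener(
      "stop",
      () => resolve(new Blob(chunks, { type })),
      { once: true }
    );

    recorder.addEventListener(
      "error",
      (event) => reject(event.error ?? event),
      { once: true }
    );
  });
}

// Browser usage sketch:
// const recorder = new MediaRecorder(stream);
// recordToBlob(recorder).then((blob) => { /* show or download blob */ });
// recorder.start();
```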

Setting up your Screen Recorder

Now that we have a basic understanding of the MediaStream and MediaStream Recording APIs, let’s use this knowledge to create a simple screen recording application.

Building the User Interface

The first step is to create a user interface for our application. To follow along, create a new project with three new files: index.html, style.css, and main.js in it. Next, copy and paste the below markup into the index.html file:

<p class="countdown" id="countdown"></p>

<div class="screen-recorder">

<h1>Capture your screen</h1>

<video controls preload="metadata" id="video"></video>

<div>

<button type="button" class="btn" id="btn">Start Recording</button>

<a href="" class="btn" id="link">download video</a>

</div>

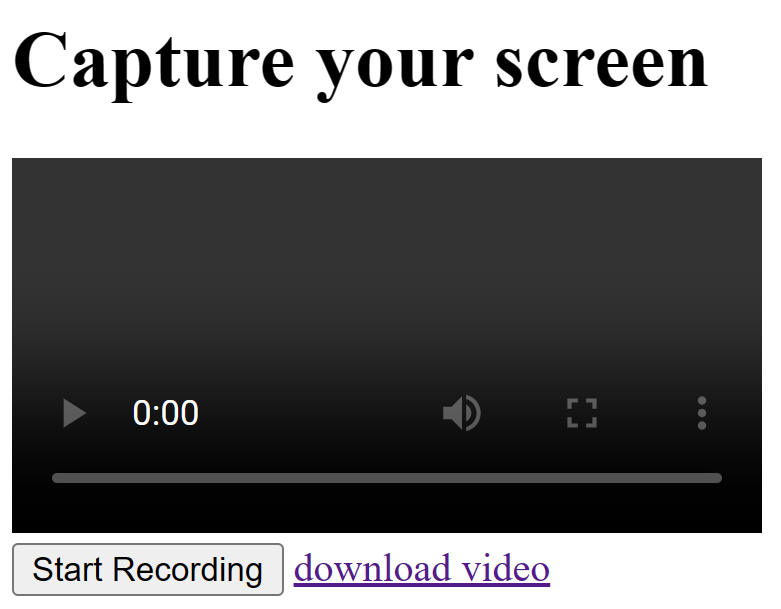

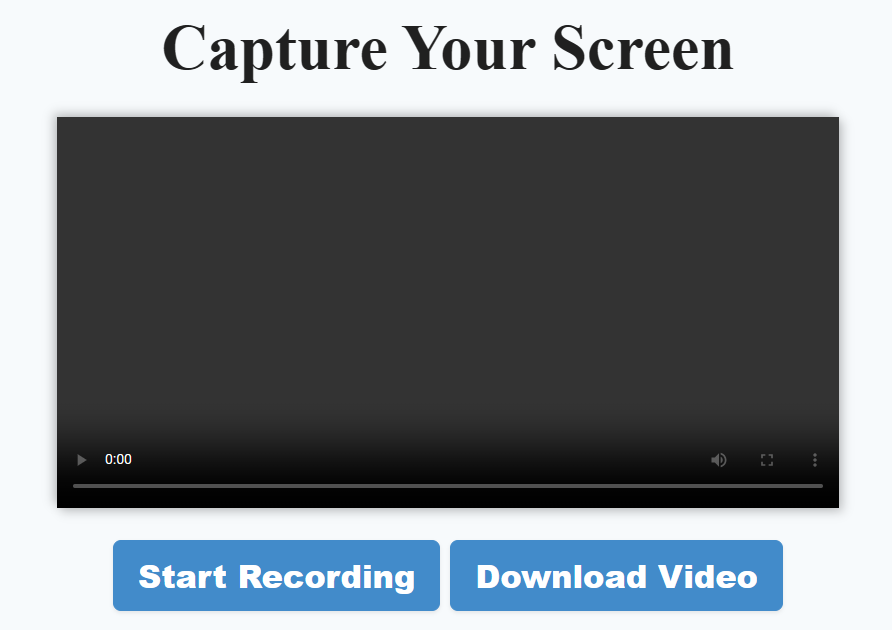

</div>This markup sets up the layout of our page. The video element will display the recorded video. The p element with a class of countdown, will display a countdown before the recording starts. You should see the below image in your browser when you save the file:

Next, copy and paste the below styles into your style.css file:

* {

padding: 0;

margin: 0;

box-sizing: border-box;

}

body {

width: 100vw;

min-height: 100vh;

position: relative;

display: grid;

place-items: center;

}

.countdown {

width: 100%;

height: 100%;

display: none;

place-items: center;

color: green;

font-size: 10rem;

font-weight: 900;

background-color: rgba(0, 0, 0, 0.5);

position: absolute;

inset: 0 0 0 0;

z-index: 10000;

}

.screen-recorder {

display: flex;

flex-direction: column;

align-items: center;

justify-content: center;

gap: 2rem;

width: 100%;

height: 100%;

background-color: #f7fafc;

color: #202020;

h1 {

text-transform: capitalize;

font-size: 4rem;

font-weight: bold;

color: #202020;

}

video {

width: 782px;

max-height: 440px;

box-shadow: 4px 4px 10px rgba(0, 0, 0, 0.2),

-4px -4px 10px rgba(0, 0, 0, 0.2);

}

}

.btn {

background-color: #428bca;

color: #fff;

font-weight: bold;

padding: 0.75rem 1.5rem;

border-radius: 0.5rem;

box-shadow: 0 0.125rem 0.25rem rgba(0, 0, 0, 0.08);

transition: background-color 0.3s ease;

border: 1px solid #428bca;

cursor: pointer;

text-transform: capitalize;

font-weight: 900;

&:hover {

background-color: #357ec7;

}

}

a {

text-decoration: none;

margin-inline-start: 20px;

}In the above stylesheet, we applied some basic CSS resets and then provided additional styling for the elements in our markup. You should see the below image in your browser when you save the file:

Accessing Screen and Audio Streams

Now that we have built our user interface, it is time to request access to the user’s media devices. In main.js, first get references to the button and the download link, and declare two variables that we will reassign later: blob will hold the finished recording, and videoStream will hold the active capture stream.

```javascript
const startBtn = document.getElementById("btn");
const downloadLink = document.getElementById("link");

let blob = null; // the finished recording
let videoStream = null; // the active screen-capture stream
```

For security reasons, browsers only allow a page to start screen capture in response to a user action, such as a button click. So let’s register a callback that runs when the user clicks startBtn:

```javascript
startBtn.addEventListener("click", startScreenCapture);
```

Next, we will define the startScreenCapture function:

```javascript
async function startScreenCapture() {
  // navigator.mediaDevices only exists in secure contexts (HTTPS or localhost)
  if (!navigator.mediaDevices?.getDisplayMedia) {
    return alert("Screen capturing not supported in your browser.");
  }

  // Only one recording at a time
  if (videoStream?.active) {
    return alert(
      "There is an ongoing recording. Please stop it before recording a new one."
    );
  }

  try {
    // Let the user pick a screen, window, or tab (and its audio, if offered)
    videoStream = await navigator.mediaDevices.getDisplayMedia({
      video: true,
      audio: true,
      surfaceSwitching: "include",
    });

    // Capture the microphone separately
    const audioStream = await navigator.mediaDevices.getUserMedia({
      audio: {
        echoCancellation: true,
        noiseSuppression: true,
        sampleRate: 44100,
      },
    });

    // Add the microphone track to the screen stream so one stream carries both
    const audioTrack = audioStream.getAudioTracks()[0];
    videoStream.addTrack(audioTrack);

    recordStream(videoStream);
  } catch (error) {
    // Release the screen capture if a later step (e.g. microphone access) failed
    videoStream?.getTracks().forEach((track) => track.stop());
    console.error(error);
    alert(error);
  }
}
```

The startScreenCapture function starts the screen-capturing process. First, it checks browser support by testing for the getDisplayMedia method; the optional chaining is needed because navigator.mediaDevices is undefined outside secure contexts. If a capture is already active, it asks the user to stop it first. Otherwise, it calls getDisplayMedia, which prompts the user to choose a screen, window, or tab to share, along with its audio where the browser supports it. It then calls getUserMedia to get microphone audio and adds that audio track to the screen stream, so a single stream carries both. Finally, it passes the combined stream to the recordStream function, which manages the recording. If any step fails, for example because the user denies a permission, the error is logged, any screen capture that was already started is released, and the user is alerted. The GIF below illustrates what happens when you save the file and click on the startBtn:
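If the user also chooses to share tab or system audio in the getDisplayMedia picker, videoStream ends up with two audio tracks once the microphone track is added. MediaRecorder implementations (Chrome’s, for example) typically record only one of them, so one audio source may be missing from the result; truly combining them would require mixing with the Web Audio API. The helper below is a minimal, hypothetical sketch (countTracksByKind is not part of the article’s code) for detecting that situation so you can warn the user or decide which track to keep:

```javascript
/**
 * Counts audio and video tracks in a MediaStream, or in any object whose
 * getTracks() returns objects with a `kind` property.
 * Hypothetical helper, not part of the original code.
 */
function countTracksByKind(stream) {
  const counts = { audio: 0, video: 0 };
  for (const track of stream.getTracks()) {
    if (track.kind === "audio" || track.kind === "video") {
      counts[track.kind] += 1;
    }
  }
  return counts;
}

// Usage sketch inside startScreenCapture, after videoStream.addTrack(audioTrack):
// if (countTracksByKind(videoStream).audio > 1) {
//   console.warn("More than one audio track: the recording may keep only one.");
// }
```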

Recording the stream

Now that we have a stream to work with, we will use the MediaStream Recording API to record it. Let’s define the recordStream function that handles the recording:

```javascript
function recordStream(stream) {
  countdown();

  const mediaRecorder = new MediaRecorder(stream, {
    mimeType: "video/webm; codecs=vp8,opus",
  });

  const recordedChunks = [];

  // Collect each chunk of recorded data the browser hands over
  mediaRecorder.addEventListener("dataavailable", (e) =>
    recordedChunks.push(e.data)
  );
}
```

The recordStream function calls the countdown function and creates a new MediaRecorder instance. This instance is configured to record the captured stream in WebM format with the VP8 video and Opus audio codecs. Next, the recordedChunks array is created to hold the recorded data.

An event listener is attached to the mediaRecorder to listen for the dataavailable event, which fires when recorded data becomes available. Because the recorder will be started without a timeslice argument, the whole recording is delivered in a single chunk when recording stops (unless requestData() is called). Each chunk, available as e.data, is pushed into the recordedChunks array.
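The mimeType above is hard-coded, and the MediaRecorder constructor throws a NotSupportedError when the browser cannot record the requested format; some browsers, Safari historically among them, record MP4 rather than WebM. Here is a minimal sketch for choosing a format at run time. It assumes you pass in MediaRecorder.isTypeSupported, and both pickSupportedMimeType and the candidate list are illustrative, not part of the original code:

```javascript
// Candidate formats, most preferred first. This list is an assumption for
// illustration; adjust it to the formats you want to support.
const CANDIDATE_MIME_TYPES = [
  "video/webm;codecs=vp9,opus",
  "video/webm;codecs=vp8,opus",
  "video/webm",
  "video/mp4",
];

/**
 * Returns the first candidate the browser can record, or "" so the caller
 * can let the browser choose its default format.
 * `isTypeSupported` is injected so this also runs outside a browser.
 */
function pickSupportedMimeType(isTypeSupported, candidates = CANDIDATE_MIME_TYPES) {
  return candidates.find((type) => isTypeSupported(type)) ?? "";
}

// Browser usage sketch:
// const mimeType = pickSupportedMimeType((t) => MediaRecorder.isTypeSupported(t));
// const mediaRecorder = new MediaRecorder(stream, mimeType ? { mimeType } : {});
```

If you adopt this, the download code’s fixed .webm extension and video/webm type would need to follow the chosen format as well.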

Next, let’s define the countdown function:

```javascript
function countdown() {
  const countDownElement = document.getElementById("countdown");
  countDownElement.style.display = "grid";

  let count = 3;

  // Show the current number, then schedule the next one a second later
  function reduceCount() {
    countDownElement.textContent = count;
    count--;

    if (count >= 0) {
      setTimeout(reduceCount, 1000);
    } else {
      // Hide the overlay once the countdown is over
      countDownElement.style.display = "none";
    }
  }

  reduceCount();
}
```

The countdown function displays a 3-second countdown before recording. It selects the element with the id countdown, makes it visible, and updates its text content with the remaining time every second. Once the countdown is over, the element is hidden again, letting the user know that the recording is starting.
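The fixed 4-second delay used later to start the recorder only loosely tracks this countdown. One alternative, sketched here under the assumption that you are willing to restructure recordStream, is a countdown that returns a Promise, so the recorder can start exactly when the countdown ends. countdownAsync is a hypothetical name, and its schedule parameter exists only so the timing can be replaced in tests:

```javascript
/**
 * Promise-based countdown (hypothetical helper, not part of the original code).
 * Calls onTick(n) for n = from, from - 1, ..., 1, one second apart, and
 * resolves one second after the last tick.
 */
function countdownAsync(from, onTick, schedule = (fn, ms) => setTimeout(fn, ms)) {
  return new Promise((resolve) => {
    let count = from;

    function tick() {
      if (count <= 0) {
        resolve(); // countdown finished
        return;
      }
      onTick(count);
      count--;
      schedule(tick, 1000);
    }

    tick();
  });
}

// Browser usage sketch (assumes the #countdown element from index.html and a
// mediaRecorder created as in recordStream):
// const el = document.getElementById("countdown");
// el.style.display = "grid";
// countdownAsync(3, (n) => { el.textContent = n; }).then(() => {
//   el.style.display = "none";
//   mediaRecorder.start();
// });
```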

Managing the Recording

Having set up the recording, we now need to start the mediaRecorder and let the user stop the recording. Let’s extend the recordStream function with these features:

function recordStream(stream) {

// Existing codes

// Stop recording and audio streaming when video streaming stops

stream.getVideoTracks()[0].addEventListener("ended", () => {

mediaRecorder.stop();

stream.getAudioTracks()[0].stop();

});

mediaRecorder.addEventListener("stop", () => {

createVideoBlob(recordedChunks);

showRecordedVideo(blob);

});

setTimeout(() => mediaRecorder.start(), 4000);

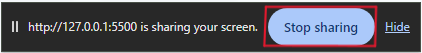

}In the above code, an event listener is attached to the video track of the ongoing stream being captured to detect when it ends. The event will be triggered when this button is pressed:

When this event occurs, it stops the recording and audio streaming. An event listener is also attached to the mediaRecorder to listen for the stop event. Once this event is triggered, it executes the createVideoBlob and showRecordedVideo functions. The mediaRecorder is then started after a delay of 4 seconds. This allows the countdown function to finish executing before the recording starts. The below GIF illustrates what should happen when you save the file and click the startBtn:
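The recorder above only stops when the user ends sharing through the browser. If you want an in-page stop control as well, keep in mind that calling track.stop() from your own code does not fire the track’s ended event, so the ended handler would not run. Here is a minimal sketch under that assumption; stopRecording is a hypothetical helper that expects the MediaRecorder and stream that recordStream works with:

```javascript
/**
 * Hypothetical in-page stop control, not part of the original code.
 * Stops the recorder directly, because track.stop() does not fire "ended".
 */
function stopRecording(recorder, stream) {
  // stop() is only meaningful while recording or paused
  if (recorder.state !== "inactive") {
    recorder.stop(); // fires dataavailable, then stop
  }
  // Release the screen and microphone so the capture indicators go away
  stream.getTracks().forEach((track) => track.stop());
}

// Usage sketch (hypothetical wiring): keep references to the recorder and the
// stream from recordStream, then call this from a button's click handler:
// stopBtn.addEventListener("click", () => stopRecording(mediaRecorder, videoStream));
```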

Displaying the recorded video

With the user’s screen now being captured and recorded, they should be able to see their video when they stop recording. To achieve this, let’s define the createVideoBlob and the showRecordedVideo functions:

```javascript
function createVideoBlob(recordedChunks) {
  // Combine the recorded chunks into one Blob, reusing the recorder's MIME type
  blob = new Blob(recordedChunks, {
    type: recordedChunks[0]?.type || "video/webm",
  });
}

function showRecordedVideo() {
  const video = document.getElementById("video");
  // Point the <video> element at an in-memory URL for the recording
  video.src = URL.createObjectURL(blob);
}
```

In the createVideoBlob function, the global blob variable is reassigned to a new Blob object created with the Blob() constructor from the recordedChunks array, which holds the chunks of recorded video data. The constructor’s options object sets the Blob’s MIME type to that of the first recorded chunk, falling back to video/webm if there are no chunks.

In the showRecordedVideo function, we get a reference to the video element and set its src attribute to a URL created with the createObjectURL() method of the URL interface, which links the recorded data to the video element for playback. Now, when the user stops recording, the recording will be displayed in the video element:
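Each call to URL.createObjectURL() keeps its Blob in memory until the URL is revoked or the page is unloaded, so recording several videos in one session accumulates memory. A minimal sketch, assuming a single module-level variable (currentVideoUrl and replaceObjectURL are hypothetical names, not part of the original code), that revokes the previous URL before creating a new one:

```javascript
// Hypothetical module-level holder for the URL currently shown in <video>
let currentVideoUrl = null;

/**
 * Creates an object URL for `videoBlob`, revoking the previous one (if any)
 * so the browser can release the earlier recording's memory.
 */
function replaceObjectURL(videoBlob) {
  if (currentVideoUrl) {
    URL.revokeObjectURL(currentVideoUrl);
  }
  currentVideoUrl = URL.createObjectURL(videoBlob);
  return currentVideoUrl;
}

// Usage sketch inside showRecordedVideo:
// video.src = replaceObjectURL(blob);
```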

Looking at the above GIF carefully, you will notice that the video shows no duration until it starts playing. This happens because recordings produced by MediaRecorder, notably in Chromium-based browsers, are WebM files written without a duration in their metadata, so the video element reports a duration of Infinity until it has read the whole file. To work around this, we will define a new function, calculateVideoDuration, that forces the browser to compute the correct duration:

```javascript
function calculateVideoDuration(videoElement) {
  videoElement.addEventListener(
    "loadedmetadata",
    () => {
      if (videoElement.duration === Infinity) {
        // Seek far past the end so the browser has to work out the real duration
        videoElement.currentTime = 1e101;

        videoElement.addEventListener(
          "timeupdate",
          () => {
            // Jump back to the beginning once the duration is known
            videoElement.currentTime = 0;
          },
          { once: true }
        );
      }
    },
    { once: true }
  );
}
```

The calculateVideoDuration function registers a one-time loadedmetadata listener on the provided videoElement. When the video’s metadata has loaded, the listener checks whether the duration is Infinity, meaning the browser could not read it from the file. If so, it sets currentTime to a very large value (1e101). Seeking that far forces the browser to scan to the end of the media, after which it knows the real duration and updates it. The timeupdate event that follows the seek then resets currentTime to 0, so playback starts from the beginning. As a result, the video’s duration property changes from Infinity to the actual length of the recording. The { once: true } option removes each listener after it runs, so listeners don’t pile up on the video element across recordings.

Next, let us call the function in the showRecordedVideo function and pass the video element as its argument:

```javascript
function showRecordedVideo() {
  // Previous code

  calculateVideoDuration(video);
}
```

Now, when we record a video, we should be able to see its correct duration:

Downloading the Recorded Media

To download the recorded media, we can use the static createObjectURL() method of the URL interface to create a temporary URL for the recorded Blob:

```javascript
downloadLink.addEventListener("click", (event) => {
  // Nothing has been recorded yet, so there is nothing to download
  if (!blob) {
    event.preventDefault();
    return alert("Record a video before downloading it.");
  }

  downloadLink.href = URL.createObjectURL(blob);

  // prompt() returns null if the user cancels, so fall back to a default name
  const fileName = prompt("What is the name of your video?") || "screen-recording";
  downloadLink.download = `${fileName}.webm`;
  downloadLink.type = "video/webm";
});
```

This code handles clicks on the download link. If no recording exists yet, it cancels the click and alerts the user. Otherwise, it creates a temporary URL for the video data stored in blob and sets it as the link’s href, which lets the browser download the video. The user is then prompted for a file name; if they cancel, screen-recording is used instead. The name is combined with the .webm extension and assigned to the link’s download attribute, which the browser uses as the file name. Finally, the link’s type attribute is set to video/webm as a hint about the file type. When you save the file and check your browser, everything should work as expected:
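Users can also type characters that are not allowed in file names, or type the extension themselves. A minimal, hypothetical helper (toDownloadFileName is not part of the original code) that normalizes whatever prompt() returns, assuming the recording is WebM:

```javascript
/**
 * Turns the value returned by prompt() into a usable download file name.
 * Hypothetical helper, not part of the original code.
 */
function toDownloadFileName(input, extension = "webm", fallback = "screen-recording") {
  // prompt() returns null when the user clicks Cancel
  let base = (input ?? "").trim();

  // Replace characters that Windows rejects in file names ("/" also covers macOS/Linux)
  base = base.replace(/[\\/:*?"<>|]/g, "-");

  // Avoid "clip.webm.webm" if the user already typed the extension
  const suffix = `.${extension}`;
  if (base.toLowerCase().endsWith(suffix.toLowerCase())) {
    base = base.slice(0, -suffix.length);
  }

  return `${base || fallback}${suffix}`;
}

// Usage sketch inside the click handler:
// downloadLink.download = toDownloadFileName(prompt("What is the name of your video?"));
```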

Conclusion

This article provided a basic understanding of MediaStream and MediaStream Recording web APIs and their components. It has effectively demonstrated how to capture screen and audio streams, manage recordings, download recordings, and address the common bug of incorrect duration display in recorded videos. While the example presented is simplified, the knowledge gained from this guide can serve as a strong foundation for building feature-rich screen recording applications.
