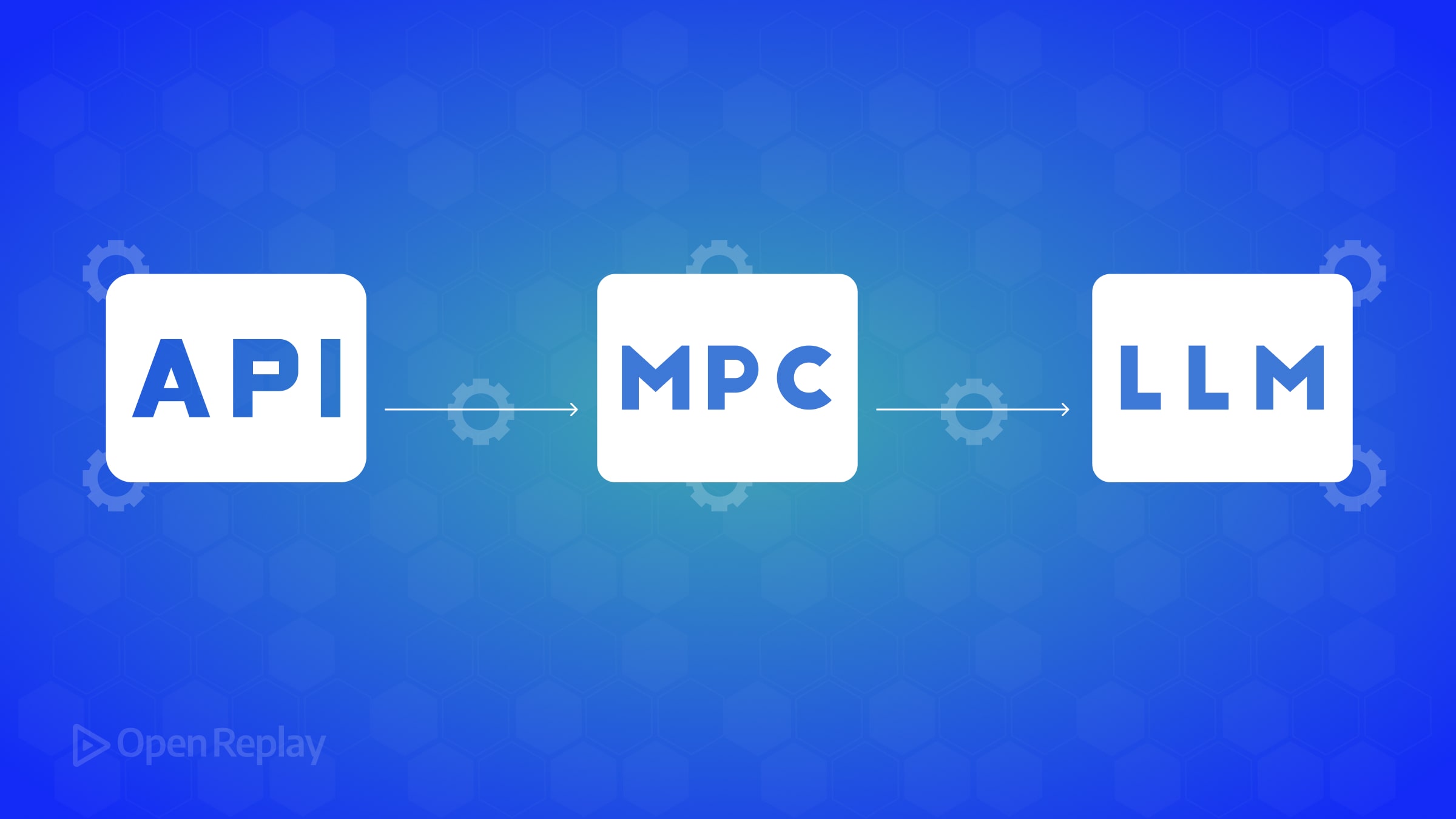

How to Expose Your Existing API to LLMs via MCP: A Comprehensive Guide

Integrating large language models (LLMs) with your existing API can be challenging. Traditionally, you’d need custom connectors or platform-specific plugins to enable AI access to your service. The Model Context Protocol (MCP) offers a better way.

What is the Model Context Protocol (MCP)?

MCP is an open standard, introduced by Anthropic and developed as an open-source project, that standardizes how AI assistants connect to external data and tools. Think of MCP as a universal adapter, a “USB-C port” for AI applications. Instead of building one-off integrations for each model or platform, you expose your API once via MCP, and any MCP-enabled client (and the LLM behind it) can use it.

Key Benefits of MCP

- Standardization: Replace fragmented integrations with a single, consistent protocol

- Reusability: Build your API connector once as an MCP server, and multiple AI clients can leverage it

- Control: The MCP server runs in your environment, so you can enforce security and manage exactly what the model can access

MCP acts as a universal plugin system for LLMs, providing a structured way for an AI to call functions or retrieve data from external sources.

Understanding MCP primitives

MCP uses three main concepts to expose functionality:

- Resources: These are like read-only data endpoints. A resource is identified by a URI (e.g., `todo://list`) and provides data to the model. Resources are meant to load context into the model (like fetching a document, database record, or list of tasks). They’re similar to GET requests and don’t usually change state.

- Tools: These are actions or operations the model can invoke, essentially functions with parameters and return values that the LLM can call. Tools can have side effects or perform calculations. Think of tools as “functions the AI can call.”

- Prompts: MCP allows defining reusable conversation templates or workflows. Prompts are like predefined conversation snippets that the server can provide to the client.

In summary: resources load data for the model, tools perform actions, and prompts guide interactions.
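To make the split concrete, here is a toy, dependency-free sketch of how a server might keep the three primitives apart. It is not the MCP SDK: the `ToyServer` class and its methods are hypothetical. It only shows that a resource is looked up by URI and returns data, a tool is called by name with arguments and may change state, and a prompt fills in a reusable template.

```python
from typing import Any, Callable


class ToyServer:
    """Hypothetical registry illustrating MCP's three primitives (not the real SDK)."""

    def __init__(self) -> None:
        self.resources: dict[str, Callable[[], Any]] = {}
        self.tools: dict[str, Callable[..., Any]] = {}
        self.prompts: dict[str, Callable[..., str]] = {}

    def read_resource(self, uri: str) -> Any:
        # Resources are addressed by URI and only return data.
        return self.resources[uri]()

    def call_tool(self, name: str, arguments: dict[str, Any]) -> Any:
        # Tools are invoked by name with keyword arguments and may have side effects.
        return self.tools[name](**arguments)

    def get_prompt(self, name: str, arguments: dict[str, Any]) -> str:
        # Prompts render a reusable conversation template.
        return self.prompts[name](**arguments)


tasks: list[dict[str, Any]] = [{"id": 1, "title": "Sample Task", "done": False}]
server = ToyServer()

# Resource: a read-only copy of the task list (like GET /tasks).
server.resources["todo://list"] = lambda: list(tasks)


def add_task(title: str) -> dict[str, Any]:
    # Tool: changes state (like POST /tasks).
    task = {"id": len(tasks) + 1, "title": title, "done": False}
    tasks.append(task)
    return task


def plan_day(focus: str) -> str:
    # Prompt: a parameterized template the client can offer to the user.
    return f"Review my to-do list and suggest an order for today, prioritizing {focus}."


server.tools["add_task"] = add_task
server.prompts["plan_day"] = plan_day
```

Calling `server.call_tool("add_task", {"title": "Buy milk"})` appends a task that the next `server.read_resource("todo://list")` returns. The real Python SDK wires up the same three roles with decorators such as `@mcp.resource`, `@mcp.tool`, and `@mcp.prompt`.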

Implementation: Step-by-step guide

Let’s walk through exposing a simple REST API to an AI assistant using MCP.

Step 1: Set up a simple REST API example

We’ll use a basic to-do list API as our example. Here’s a minimal implementation using Flask:

```python
from flask import Flask, request, jsonify

app = Flask(__name__)

# In-memory task store (a real API would use a database)
tasks = [{"id": 1, "title": "Sample Task", "done": False}]


@app.route('/tasks', methods=['GET'])
def get_tasks():
    # Return the full list of tasks
    return jsonify(tasks)


@app.route('/tasks', methods=['POST'])
def create_task():
    # Add a new task from the JSON request body; reject requests without a title
    data = request.get_json(silent=True) or {}
    title = data.get("title")
    if not title:
        return jsonify({"error": "title is required"}), 400
    new_task = {
        "id": len(tasks) + 1,
        "title": title,
        "done": False,
    }
    tasks.append(new_task)
    return jsonify(new_task), 201

# Save as app.py and run with: flask run (serves on http://localhost:5000 by default)
```

This API has two endpoints:

- GET /tasks - Retrieve all tasks

- POST /tasks - Add a new task

In a real scenario, this could be any existing API with a database backend.

Step 2: Create an MCP server to wrap the API

Now we’ll create an MCP server in Python that exposes our to-do API to an LLM:

First, install the MCP package (plus requests, which the server uses to call the API):

```bash
pip install "mcp[cli]" requests
```

Then create a file called todo_mcp_server.py:

```python
from mcp.server.fastmcp import FastMCP
import requests  # we'll use requests to call our REST API

API_BASE = "http://localhost:5000"  # where the Flask API from Step 1 is running

# Initialize an MCP server with a descriptive name
mcp = FastMCP("To-Do API MCP Server")


# Define a Resource for reading tasks (like a GET endpoint)
@mcp.resource("todo://list")
def list_tasks() -> list:
    """Fetch all to-do tasks from the API."""
    response = requests.get(f"{API_BASE}/tasks", timeout=10)
    response.raise_for_status()
    return response.json()  # return the list of tasks


# Define a Tool for adding a new task (like a POST operation)
@mcp.tool()
def add_task(title: str) -> dict:
    """Add a new task via the API and return the created task."""
    payload = {"title": title}
    response = requests.post(f"{API_BASE}/tasks", json=payload, timeout=10)
    response.raise_for_status()
    return response.json()  # return the new task data


if __name__ == "__main__":
    mcp.run(transport="stdio")
```

Let’s break down what’s happening:

- We create a `FastMCP` instance named "To-Do API MCP Server", which sets up the server and registers our tools and resources.

- We use `@mcp.resource("todo://list")` to expose a resource that returns the list of tasks. This maps to our API’s GET endpoint.

- We use `@mcp.tool()` to expose a tool that adds a new task. This maps to our API’s POST endpoint.

- The docstrings for each function are important: the LLM sees these descriptions when deciding how to use the tools.

- We run the server with `mcp.run(transport="stdio")`, which communicates over standard input/output (good for local development).

At this point, we have an MCP server with two capabilities: a resource todo://list (to get tasks) and a tool add_task (to create a task). These correspond to our API’s functionalities. In effect, we’ve wrapped the REST API with MCP.
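Because a function's docstring and type hints are what the model ends up seeing, it can help to picture the tool entry a client receives. The sketch below approximates it with the standard library: `describe_tool` (a hypothetical helper) reads a function's name, docstring, and annotated parameters and emits a `tools/list`-style entry with a JSON Schema for the inputs. This is an approximation for illustration only; FastMCP builds the real schema itself, so details such as titles or extra keys will differ.

```python
import inspect
from typing import Any, Callable

# Rough mapping from Python annotations to JSON Schema types (illustrative only).
_JSON_TYPES: dict[Any, str] = {
    str: "string", int: "integer", float: "number",
    bool: "boolean", list: "array", dict: "object",
}


def describe_tool(fn: Callable[..., Any]) -> dict[str, Any]:
    """Approximate the tool entry a client sees in a tools/list response."""
    properties: dict[str, Any] = {}
    required: list[str] = []
    for name, param in inspect.signature(fn).parameters.items():
        schema: dict[str, Any] = {}
        json_type = _JSON_TYPES.get(param.annotation)
        if json_type is not None:
            schema["type"] = json_type
        properties[name] = schema
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default, so the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def add_task(title: str) -> dict:
    """Add a new task via the API and return the created task."""
    ...  # body omitted: only the signature and docstring matter here
```

Running `describe_tool(add_task)` yields the name `add_task`, the docstring as its description, and a schema requiring a string `title`, which is why a vague docstring or a missing type hint makes a tool harder for the model to use correctly.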

Step 3: Run and test the MCP server locally

Before running the MCP server, make sure your Flask API is running (e.g., with flask run).

Now start the MCP server in development mode:

```bash
mcp dev ./todo_mcp_server.py
```

The `mcp dev` command launches the MCP Inspector, an interactive development dashboard in your browser. This dashboard lets you:

- See your MCP server’s name and available tools/resources

- Test the `todo://list` resource: it should return the current list of tasks

- Test the `add_task` tool: enter a task title and execute it

- Verify results and see logs in the history panel

This dashboard is invaluable for development and debugging. It speaks the same protocol a real LLM client would use, but gives you a GUI for testing.

Step 4: Connect the MCP server to an LLM client

Now let’s hook up a live LLM to our MCP server. Several AI assistants and tools support MCP; here is how to connect two of them, Claude Desktop and Cursor.

Connecting with Claude Desktop

Anthropic’s Claude Desktop application has built-in support for local MCP servers. To integrate:

```bash
mcp install ./todo_mcp_server.py
```

This registers your server with Claude Desktop (you may need to restart the app before it appears). In Claude’s interface, you should see your “To-Do API MCP Server” available. Now when you have a conversation with Claude, you can:

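- Attach the `todo://list` resource to the conversation and ask “What’s on my to-do list?” (in Claude Desktop, resources are generally selected by the user rather than fetched by the model on its own)

- Request: “Add a to-do item to buy milk” - Claude will use the `add_task` tool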

Claude will handle running the MCP server and routing requests when the AI decides to use it.

Connecting with Cursor IDE

Cursor is a code editor with an AI assistant that supports MCP. To connect:

- Go to Cursor’s settings under “Model Context Protocol”

- Add your MCP server by providing the path to `todo_mcp_server.py`

- Specify the transport (stdio)

Once connected, Cursor will detect your server’s tools and resources. You can then ask the Cursor assistant questions like “What tasks are in my to-do list?” or “Add ‘write blog post’ to my tasks.”

General notes on LLM clients

The exact connection steps vary by client:

- For Claude Desktop, use `mcp install` or the app’s UI

- For Cursor, configure the server in settings

- Other environments may have different approaches

After connecting, test by prompting the AI in a way that encourages tool use. Many MCP clients will visually indicate when the AI uses a tool.

Security Note: When you connect an MCP server, the LLM has access to whatever that server exposes. MCP servers typically run locally or within your infrastructure, which is good for security. If your API requires authentication, the MCP server can supply the credentials; load them from environment variables or a secrets store rather than hard-coding them in the server code.
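One way to supply credentials without hard-coding them is to read them from the environment when the MCP server starts and attach them to each outgoing API request. The sketch below uses only the standard library; the `TODO_API_TOKEN` variable name and the Bearer scheme are assumptions about your API, and `build_request` is a hypothetical helper that constructs a request without sending it.

```python
import json
import os
import urllib.request
from typing import Optional

API_BASE = "http://localhost:5000"  # the Flask API from Step 1


def auth_headers() -> dict[str, str]:
    """Build auth headers from an environment variable (the name is an assumption)."""
    token = os.environ.get("TODO_API_TOKEN")
    if not token:
        raise RuntimeError("Set TODO_API_TOKEN before starting the MCP server")
    return {"Authorization": f"Bearer {token}"}


def build_request(path: str, payload: Optional[dict] = None) -> urllib.request.Request:
    """Construct, but do not send, an authenticated request to the API."""
    headers = auth_headers()
    data = None
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    # urllib issues a POST when data is given and a GET otherwise
    return urllib.request.Request(API_BASE + path, data=data, headers=headers)
```

Sending it is then `urllib.request.urlopen(build_request("/tasks"), timeout=10)`. With `requests`, as in Step 2, the equivalent is passing `headers=auth_headers()` to `requests.get` or `requests.post`, so the token never appears in the source file or in anything the model sees.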

Comparing MCP with traditional approaches

Let’s compare how using MCP to expose an API differs from other integration methods:

Direct LLM API calls (No MCP)

Without MCP, you might:

- Use function calling or plugins that are platform-specific (tied to OpenAI or a specific environment)

- Embed API details in prompts, which is error-prone - the model might hallucinate endpoints or send incorrect formats

GraphQL or other query languages

With GraphQL, you could have the LLM construct queries, but:

- The model still needs to know the schema and query syntax

- You’d likely need a custom integration layer to verify the model’s queries

The MCP advantage

Using MCP abstracts these details into a standardized interface:

- The LLM doesn’t need to know HTTP methods or query syntax

- It queries the MCP server for available tools and resources, which have human-readable names and descriptions

- The process focuses on capabilities rather than low-level calls

- The same MCP server works for any client that supports the protocol
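Discovery happens through an ordinary JSON-RPC exchange: the client sends a `tools/list` request and receives each tool's name, description, and input schema. The sketch below parses an illustrative response (hand-written to match the `add_task` tool from Step 2, not captured from a real server) and turns it into the kind of summary a client might show the model. `summarize_tools` is a hypothetical helper.

```python
import json

# Illustrative tools/list response, hand-written for this example.
RAW_RESPONSE = """
{
  "jsonrpc": "2.0",
  "id": 2,
  "result": {
    "tools": [
      {
        "name": "add_task",
        "description": "Add a new task via the API and return the created task.",
        "inputSchema": {
          "type": "object",
          "properties": {"title": {"type": "string"}},
          "required": ["title"]
        }
      }
    ]
  }
}
"""


def summarize_tools(raw: str) -> list[str]:
    """Turn a tools/list response into one line per tool: name(args): description."""
    message = json.loads(raw)
    lines = []
    for tool in message["result"]["tools"]:
        params = ", ".join(tool["inputSchema"].get("properties", {}))
        lines.append(f"{tool['name']}({params}): {tool['description']}")
    return lines
```

Nothing in this listing mentions HTTP verbs or URLs; the model only sees a named capability and the arguments it takes, and the server maps that back to `POST /tasks`.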

Here’s a detailed comparison:

| Aspect | Traditional API Integration | Using MCP Integration |
| --- | --- | --- |
| Standardization | No unified standard - each platform has its own integration method or plugin format | One open standard across platforms - build once, use anywhere |
| Discovery of Actions | The LLM must be told what endpoints/queries are available, or they’re hard-coded | LLM clients can programmatically list available tools/resources from an MCP server |
| Data Access | API responses often sent via prompts, hitting context limits | MCP resources load data directly into the LLM’s context in a managed way |
| Coding Effort | High - custom HTTP calls, parsing, and error handling for each model | Simplified - the MCP SDK handles protocol details; you write Python functions |
| Security & Control | The model might generate arbitrary HTTP calls if not constrained | The MCP server acts as a gatekeeper - you decide exactly what’s exposed |
| Flexibility | Hard to adapt to new LLMs or use cases | Very flexible - models can orchestrate multiple MCP calls; easily add new tools |

In short, MCP offers a more AI-friendly layer on top of your API. Rather than dealing with raw HTTP calls, the model interacts with named actions and data that make sense in conversation.

Conclusion

Exposing your existing REST API to LLMs via MCP can greatly simplify integration. Instead of creating one-off connections for each AI platform, you implement the Model Context Protocol once and gain compatibility with any supporting client.

MCP provides a standardized, secure, and flexible way to bridge AI and your data. It turns your API into a modular component that any advanced AI can use responsibly and intelligently. This opens up new possibilities: your LLM-powered assistant could combine your API with other MCP connectors, all within the same unified framework.

FAQs

Yes. MCP is quite flexible – you can wrap any API or external service as long as you can write code to access it. Whether your API returns JSON, XML, or something else, you can process it in Python and return a Python data type (MCP will handle serializing it for the LLM).
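For example, if an existing endpoint returned XML instead of JSON, the wrapping function could parse it with the standard library and return plain Python data. The XML layout and the `parse_tasks_xml` helper below are hypothetical; in a real server you would fetch the XML from your API and decorate the function with `@mcp.tool()` or `@mcp.resource(...)` as in Step 2.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML payload from a legacy endpoint.
SAMPLE_XML = """
<tasks>
  <task id="1" done="false">Sample Task</task>
  <task id="2" done="true">Buy milk</task>
</tasks>
"""


def parse_tasks_xml(xml_text: str) -> list[dict]:
    """Convert <task> elements into JSON-friendly dicts an MCP tool can return."""
    root = ET.fromstring(xml_text.strip())
    return [
        {
            "id": int(task.get("id")),
            "title": (task.text or "").strip(),
            "done": task.get("done") == "true",
        }
        for task in root.findall("task")
    ]
```

The function returns the same shape as the JSON API in Step 1, so the model sees identical task data regardless of the wire format behind it.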

The LLM itself doesn't inherently speak MCP – it's the combination of the model plus an MCP client that enables this. As of 2025, Claude, Cursor, Zed, Replit, and others are integrating MCP clients. There's also an official Python client library if you want to script an interaction between an LLM and an MCP server without a full UI client.

They address similar goals – letting an AI call external APIs – but MCP is an open standard and works across different AI systems. ChatGPT plugins are proprietary to OpenAI's ChatGPT interface, and function calling in GPT-4 is specific to OpenAI's API. One MCP server can serve many different models/clients.

MCP is still relatively new but rapidly maturing. In terms of security: because MCP allows an AI to execute code or retrieve data, treat your MCP server with the same caution as an API. Only expose what's necessary. The AI can only call the tools you've defined, nothing else. One advantage of MCP is you can run the server within your firewall or locally, reducing exposure.

Python currently has the most mature SDK, but MCP is language-agnostic. The protocol itself (which typically uses JSON-RPC over stdio or SSE) can be implemented in any language. There are SDKs and examples in other languages, including C# and JavaScript.
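To show what “JSON-RPC over stdio” means in practice, the sketch below builds the JSON-RPC 2.0 requests a client would send to read the `todo://list` resource and call the `add_task` tool, and frames them the way the stdio transport expects: one JSON object per line, with no embedded newlines. The `make_request` and `frame` helpers are hypothetical, and a real session must first complete the `initialize` handshake, which is omitted here.

```python
import json
from itertools import count
from typing import Any

_ids = count(1)  # request ids must not be reused within a session


def make_request(method: str, params: dict[str, Any]) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def frame(message: dict[str, Any]) -> str:
    """Serialize one message as a single newline-terminated line for stdio."""
    return json.dumps(message, separators=(",", ":")) + "\n"


read_list = make_request("resources/read", {"uri": "todo://list"})
add_milk = make_request(
    "tools/call", {"name": "add_task", "arguments": {"title": "Buy milk"}}
)
wire = frame(read_list) + frame(add_milk)  # what a client writes to the server's stdin
```

Any language that can read and write lines of JSON can produce and consume these messages, which is what makes an MCP server implementable outside Python.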

Absolutely. An MCP server can expose multiple tools and resources, even if they relate to different systems. You could create a single MCP server with tools for a To-Do API, a Weather API, and resources for a local database file. Alternatively, you might run multiple MCP servers (one per service) and connect them all to the client.