Improving Website Speed: Critical Rendering Optimizations

Whether you’re a front-end developer, a DevOps engineer, or a website owner, this comprehensive guide will provide you with the knowledge and tools necessary to tackle critical rendering path optimization effectively. It will ensure that your website delivers a lightning-fast experience to every visitor.


In today’s digital landscape, website speed is an important factor that can make or break the user experience. Slow-loading websites not only frustrate visitors but also negatively impact key business metrics:

- bounce rate

- conversion rate

- search engine rankings

According to Google’s research, as page load time increases from 1 second to 10 seconds, the probability of a mobile site visitor bouncing increases by a staggering 123%.

Optimizing the critical rendering path (CRP) is a strategy that every web developer and website owner should prioritize to deliver fast websites that keep users engaged and satisfied. The critical rendering path is the sequence of steps that a browser follows to construct the initial render of a web page, including processing HTML, CSS, and JavaScript.

By optimizing the critical rendering path, websites can significantly reduce the time it takes to render the initial visible portion of a page, resulting in a smoother and more responsive user experience. This article will take us through the intricacies of the critical rendering path, exploring various techniques and best practices to streamline this process and ultimately improve website speed and performance.

Understanding the critical rendering path

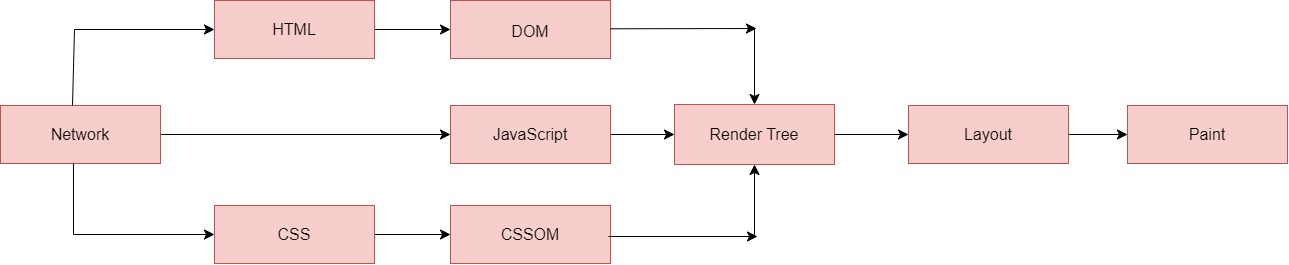

To optimize the critical rendering path effectively, it’s important to understand its underlying components and how they collectively impact website performance. The critical rendering path consists of three main components:

- HTML: The HTML markup serves as the foundation of a web page, defining its structure and content. When a browser receives the HTML file, it parses and constructs the Document Object Model (DOM), a hierarchical representation of the page’s elements.

- CSS: CSS (Cascading Style Sheets) controls the visual styles and layout of web page elements. The browser constructs the CSS Object Model (CSSOM) by parsing the CSS files referenced in the HTML.

- JavaScript: JavaScript adds interactivity, functionality, and dynamic behavior to web pages. The browser must parse and execute JavaScript code, which can potentially modify the DOM and CSSOM.

The critical rendering path is the sequence of steps the browser follows to process these components and render the initial visible portion of a web page:

- Parse HTML and construct the DOM tree

- Parse CSS and construct the CSSOM tree

- Parse JavaScript when the browser encounters <script> tags in the HTML

- Combine the DOM and CSSOM to create the render tree

- Run the layout process to compute the geometry of each node in the render tree

- Paint the individual nodes on the screen

The image at the start of this section represents these steps visually. It depicts what the browser must do to convert the HTML, CSS, and JavaScript code into a rendered page that the user can interact with. This process is also often called the “pixel pipeline” because it describes the journey from parsing HTML, CSS, and JavaScript to rendering pixels on the screen.

One of the most significant performance bottlenecks in the critical rendering path is render-blocking resources: CSS files that the browser must download and parse, and JavaScript files that it must download, parse, and execute, before it can render the page’s content. For instance, if a large, unoptimized JavaScript file is referenced at the top of the HTML file without the async or defer attribute, HTML parsing stops until the script has run, which can significantly delay rendering and result in a poor user experience.
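To make the distinction concrete, here is a minimal JavaScript sketch that flags which resources are likely to hold up the first render. The descriptor shape (tag, rel, media, async, defer, type) and the function name blocksFirstRender are assumptions made for illustration, and the media check is deliberately simplified.

```javascript
// Hypothetical helper: decides whether a resource, described by a plain
// object, is likely to delay the first render. Simplified on purpose.
function blocksFirstRender(resource) {
  if (resource.tag === "link" && resource.rel === "stylesheet") {
    // A stylesheet blocks rendering unless its media query cannot match
    // the screen (only the common "print" case is handled here).
    return resource.media !== "print";
  }
  if (resource.tag === "script") {
    // Classic external scripts pause HTML parsing unless marked async or
    // defer; module scripts are deferred by default.
    return !resource.async && !resource.defer && resource.type !== "module";
  }
  return false; // everything else is treated as non-blocking in this sketch
}

const resources = [
  { tag: "link", rel: "stylesheet", href: "main.css" },
  { tag: "link", rel: "stylesheet", href: "print.css", media: "print" },
  { tag: "script", src: "app.js" },
  { tag: "script", src: "analytics.js", defer: true },
];

const blocking = resources
  .filter(blocksFirstRender)
  .map((resource) => resource.href || resource.src);
console.log(blocking); // [ 'main.css', 'app.js' ]
```

A list like this is a reasonable starting point for deciding which files to inline, defer, or load asynchronously.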

Optimizing the critical rendering path

Streamlining the critical rendering path is paramount to delivering lightning-fast web experiences that captivate users and drive business success. By implementing a strategic combination of techniques, developers can minimize render-blocking resources, leverage browser caching, optimize asset delivery, and utilize the power of modern web technologies.

Minimizing render-blocking resources

One of the most effective ways to accelerate the critical rendering path is to minimize render-blocking resources, particularly CSS and JavaScript files that can delay a web page’s initial rendering.

Firstly, we can inline the critical CSS. Generally, this involves embedding the minimal CSS required for rendering the above-the-fold content directly in the <head> section of the HTML document. This technique eliminates the need for an additional roundtrip to fetch external CSS files, resulting in faster initial rendering. Let’s look at an example:

<head>

<style>

/* Inlined critical CSS */

body {

font-family: Arial, sans-serif;

margin: 0;

}

header {

background-color: #333;

color: #fff;

padding: 20px;

}

/* ... */

</style>

<!-- Asynchronously load non-critical CSS -->

<link

rel="preload"

href="styles.css"

as="style"

onload="this.rel='stylesheet'"

/>

<noscript>

<link rel="stylesheet" href="styles.css" />

</noscript>

</head>

In the example above, the critical CSS styles are inlined, while the non-critical styles are loaded asynchronously using rel="preload". Once the CSS file has loaded, the onload handler changes the rel attribute of the <link> element from preload to stylesheet, which tells the browser to apply the file to the document. In addition, the <noscript> tag provides a fallback for browsers that have JavaScript disabled or don’t support it.

Another way to minimize render-blocking resources is to defer non-critical JavaScript. Render-blocking JavaScript can significantly impede the critical rendering path by forcing the browser to download and execute the script before it can continue parsing the page. To avoid this issue, we can defer the loading of non-critical JavaScript:

<body>

<!-- Page content -->

<!-- Defer non-critical JavaScript -->

<script src="analytics.js" defer></script>

<script src="non-critical.js" defer></script>

</body>

In this example, the defer attribute instructs the browser to download the non-critical JavaScript files in parallel while it continues to parse the HTML and construct the DOM. Deferred scripts execute in document order once parsing has finished, so they no longer block the parser on the critical rendering path.

Leveraging browser caching

Effective browser caching strategies can significantly reduce the load on the critical rendering path by minimizing the need to fetch resources from the server on subsequent page loads.

By setting appropriate Cache-Control headers, we can instruct browsers to cache static assets like CSS, JavaScript, and image files for a suitable duration, reducing the number of roundtrips required to fetch these resources. The rules below are written schematically, as a path pattern followed by the header to send for matching URLs. They are not literal Apache syntax; on an Apache HTTP server, the same policy would be expressed with mod_headers directives in the server configuration or an .htaccess file:

# One year for assets with revisioned filenames

/assets/*.*.[0-9a-f][0-9a-f][0-9a-f][0-9a-f][0-9a-f][0-9a-f][0-9a-f][0-9a-f].*

Cache-Control: max-age=31536000, immutable

# One week for assets without revisioned filenames

/assets/*.*

Cache-Control: max-age=604800, public

# One day for HTML files

/*.html

Cache-Control: max-age=86400, public

# No caching for dynamic content

/api/*

Cache-Control: no-cache, no-store, must-revalidate

In this example, static assets with revisioned filenames (e.g., app.min.0a1b2c3d.js, where the eight-character hexadecimal hash changes whenever the file changes) are cached for one year with the immutable directive, while non-revisioned assets are cached for one week. HTML files are cached for one day, and dynamic content (e.g., API endpoints) is not cached at all.
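The same policy can be sketched as a small JavaScript function that a server or build script might call to pick a header value for a given URL path. The function name cacheControlFor and the regular expressions are assumptions for illustration; they approximate the path patterns above and are not a drop-in server configuration.

```javascript
const ONE_DAY = 60 * 60 * 24; // seconds

// Returns the Cache-Control value for a URL path, or null when no rule applies.
function cacheControlFor(path) {
  // Dynamic content: never cache
  if (path.startsWith("/api/")) {
    return "no-cache, no-store, must-revalidate";
  }
  // Revisioned asset: an 8-character hex hash sits before the extension
  if (/^\/assets\/[^/]+\.[0-9a-f]{8}\.[^/.]+$/.test(path)) {
    return `max-age=${365 * ONE_DAY}, immutable`;
  }
  // Any other file directly under /assets/
  if (/^\/assets\/[^/]+\.[^/]+$/.test(path)) {
    return `max-age=${7 * ONE_DAY}, public`;
  }
  // HTML documents
  if (path.endsWith(".html")) {
    return `max-age=${ONE_DAY}, public`;
  }
  return null;
}

console.log(cacheControlFor("/assets/app.min.0a1b2c3d.js")); // max-age=31536000, immutable
console.log(cacheControlFor("/assets/logo.png")); // max-age=604800, public
```

The rules are checked from most to least specific, so a revisioned asset never falls through to the shorter one-week lifetime.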

Another approach to caching is to use a service worker. Service workers are a powerful feature of modern browsers that enables developers to implement advanced caching strategies, including offline support and intelligent resource caching and retrieval.

Here’s a simple service worker script:

// Cache name and assets to cache

const CACHE_NAME = "my-website-cache-v1";

const urlsToCache = [

"/",

"/index.html",

"/styles/main.css",

"/scripts/app.js",

"/images/logo.png",

];

// Install service worker and cache assets

self.addEventListener("install", (event) => {

event.waitUntil(

caches

.open(CACHE_NAME)

.then((cache) => cache.addAll(urlsToCache))

.then(() => self.skipWaiting())

);

});

// Serve cached assets

self.addEventListener("fetch", (event) => {

event.respondWith(

caches.match(event.request).then((response) => {

if (response) {

return response;

}

// Fetch from network if not cached

return fetch(event.request);

})

);

});

// Update service worker

self.addEventListener("activate", (event) => {

event.waitUntil(

caches.keys().then((cacheNames) => {

return Promise.all(

cacheNames.map((cacheName) => {

if (cacheName !== CACHE_NAME) {

return caches.delete(cacheName);

}

})

);

})

);

});

To use this service worker, save the script as sw.js in the root of the site so that it is served from /sw.js.

Here’s a breakdown of the code:

- Cache name and assets to cache: The CACHE_NAME variable stores the cache’s name, and the urlsToCache array contains the URLs of the assets to be cached (e.g., HTML, CSS, JavaScript, and image files).

- Install event: When the service worker is installed, it opens a cache with the specified CACHE_NAME and caches all the assets listed in the urlsToCache array. The self.skipWaiting() method immediately activates the updated service worker.

- Fetch event: When the page makes a request, the service worker first checks if the requested asset is available in the cache. If it is, the cached response is returned. If not, the request is fetched from the network.

- Activate event: When the service worker is activated, it checks for any existing caches that are not the current CACHE_NAME and deletes them. This ensures that outdated caches are removed and the new cache is used.

This service worker is only a basic cache-first implementation. Production-ready service workers often add more advanced techniques, such as finer-grained cache versioning and precaching, and separate strategies for different types of requests (e.g., navigations and API calls).

Next, register the service worker from your main JavaScript file or from a script in your HTML file:

// Register the service worker

if ("serviceWorker" in navigator) {

navigator.serviceWorker

.register("/sw.js")

.then(function (registration) {

console.log("Service worker registered successfully");

})

.catch(function (error) {

console.log("Service worker registration failed:", error);

});

}

This code registers the service worker if the browser supports it. No scope is passed explicitly, so the scope defaults to the directory that sw.js is served from. Because the file sits at the root level, the service worker controls all pages on the site.
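As mentioned earlier, production service workers often treat different request types differently. A minimal sketch of that routing decision might look like the following; the function name chooseStrategy and the strategy labels are assumptions for illustration, and the /api/ prefix simply mirrors the path used in the caching rules above.

```javascript
// Hypothetical routing helper for a service worker's fetch handler.
// The returned strings are labels for this sketch, not browser APIs.
function chooseStrategy(request) {
  const { pathname } = new URL(request.url);
  if (request.method !== "GET") return "network-only"; // never cache writes
  if (pathname.startsWith("/api/")) return "network-only"; // keep API data fresh
  if (request.mode === "navigate") return "network-first"; // pages: fresh, cache as fallback
  return "cache-first"; // static assets: fastest path
}

// Inside sw.js, the fetch listener could branch on the result, e.g.:
//   const strategy = chooseStrategy(event.request);
console.log(
  chooseStrategy({ method: "GET", mode: "no-cors", url: "https://example.com/styles/main.css" })
); // cache-first
```

Keeping the decision in a plain function like this makes it easy to unit-test outside the browser, since it only reads the method, mode, and url properties of the request.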

Image optimization

Images are an essential part of modern web experiences, but they can also be a significant performance bottleneck if not optimized properly. Unoptimized images can significantly increase page weight, leading to longer load times and a degraded user experience.

Firstly, serving images at their intended display size and optimizing their compression levels can drastically reduce file sizes and improve load times. You can achieve this through various techniques:

- Resizing images to their intended display dimensions: Serving images larger than necessary wastes bandwidth and increases load times.

- Optimizing image compression levels: Finding the right balance between image quality and file size through appropriate compression settings.

- Using appropriate image formats: Choosing the right image format (e.g., JPEG for photographs, PNG for graphics with transparent backgrounds, SVG for vector graphics) can result in smaller file sizes without compromising quality.
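One common way to serve images at their intended display size is the srcset and sizes attributes of the <img> element, which let the browser pick the smallest suitable file. The sketch below generates such markup; the helper names and the "-<width>" file naming scheme are assumptions for illustration, and the inputs are assumed to be trusted because no HTML escaping is performed.

```javascript
// Builds a srcset value such as "photo-480.jpg 480w, photo-960.jpg 960w".
// The "-<width>" file naming scheme is an assumption for this sketch.
function buildSrcset(baseName, extension, widths) {
  return widths
    .map((width) => `${baseName}-${width}.${extension} ${width}w`)
    .join(", ");
}

// Returns an <img> tag that uses the smallest width as the fallback src.
function responsiveImg(baseName, extension, widths, sizes, alt) {
  const fallback = `${baseName}-${widths[0]}.${extension}`;
  const srcset = buildSrcset(baseName, extension, widths);
  return `<img src="${fallback}" srcset="${srcset}" sizes="${sizes}" alt="${alt}">`;
}

console.log(
  responsiveImg("photo", "jpg", [480, 960], "(max-width: 600px) 480px, 960px", "A photo")
);
```

The resized files themselves still need to be produced by an image pipeline; this only shows how the markup can advertise them to the browser.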

Also, modern image formats like WebP and AVIF offer superior compression capabilities compared to traditional formats like JPEG and PNG, resulting in significantly smaller file sizes without compromising quality. Therefore, we can use the <picture> element and its <source> children to enable the browser to choose the most appropriate image format based on its support:

<picture>

<source srcset="image.avif" type="image/avif" />

<source srcset="image.webp" type="image/webp" />

<img src="image.jpg" alt="Fallback Image" />

</picture>

The browser uses the first <source> whose type it supports, so the formats are listed in order of preference, with AVIF first. With the above code, a browser that supports AVIF or WebP loads the corresponding file, resulting in faster load times. If neither format is supported, the browser falls back to the traditional JPEG image.

Lazy loading is another technique to optimize image rendering. It defers the loading of off-screen images until they are needed, reducing the initial page weight and thereby improving the critical rendering path:

<img src="actual-image.jpg" alt="Lazy Loaded Image" loading="lazy">

In this example, the loading="lazy" attribute instructs the browser to defer loading the image specified in the src attribute. (A data-src attribute is only needed by JavaScript-based lazy-loading techniques, which copy its value into src themselves.) The image is fetched only when it is about to be displayed, which typically happens as the user scrolls and the image approaches the viewport.
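Where native lazy loading is not enough, a script-based variant keeps the real URL in a data-src attribute and swaps it in when the image nears the viewport. The following is a minimal sketch of that pattern using IntersectionObserver; the function names and the 200px root margin are assumptions chosen for illustration.

```javascript
// Copy the real URL from data-src into src so the browser starts the download.
function activateImage(img) {
  if (img.dataset && img.dataset.src) {
    img.src = img.dataset.src;
    delete img.dataset.src;
  }
  return img;
}

// Observe each image and activate it shortly before it scrolls into view.
function lazyLoad(images) {
  if (typeof IntersectionObserver === "undefined") {
    // No observer support: load everything immediately
    images.forEach(activateImage);
    return null;
  }
  const observer = new IntersectionObserver(
    (entries, obs) => {
      for (const entry of entries) {
        if (entry.isIntersecting) {
          activateImage(entry.target);
          obs.unobserve(entry.target); // each image only needs loading once
        }
      }
    },
    { rootMargin: "200px" } // start loading a little before the image is visible
  );
  images.forEach((img) => observer.observe(img));
  return observer;
}

// Browser usage: lazyLoad(document.querySelectorAll("img[data-src]"));
```

The fallback branch means images still load in environments without IntersectionObserver, at the cost of losing the deferral.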

Implementing server-side rendering (SSR) or static site generation (SSG)

Traditional client-side rendering (CSR) approaches may not be sufficient in some cases, because the browser must download and run JavaScript before any content appears. In such scenarios, developers can leverage server-side rendering (SSR) or static site generation (SSG) techniques to enhance performance further.

Server-side rendering involves generating the page’s HTML on the server and sending the fully rendered markup to the client. The browser can start painting content as soon as the HTML and CSS arrive, without waiting for JavaScript to build the page, which shortens the critical rendering path and improves perceived load times.

SSR is particularly beneficial for content-heavy websites, such as news portals, blogs, or e-commerce platforms, where the initial page load is crucial for user experience and search engine optimization (SEO).

Also, static site generation is a technique where the entire website is pre-rendered at build time, resulting in a collection of static HTML, CSS, and JavaScript files that can be served directly from a content delivery network (CDN) or a web server.
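Both approaches rest on the same idea: producing complete HTML before it reaches the browser. The sketch below shows a tiny template function that could serve either purpose; renderPage, escapeHtml, and the article shape are hypothetical names for illustration, and real projects would typically rely on a framework rather than hand-written templates.

```javascript
// Escape text so that content cannot inject markup into the page.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical page template: turns article data into a complete HTML document.
function renderPage(article) {
  return [
    "<!DOCTYPE html>",
    `<html><head><title>${escapeHtml(article.title)}</title></head>`,
    `<body><h1>${escapeHtml(article.title)}</h1>`,
    `<p>${escapeHtml(article.body)}</p></body></html>`,
  ].join("\n");
}

// SSR: call renderPage inside a request handler and send the result.
// SSG: call renderPage once per article at build time and write each
// result to a file that a CDN or web server can deliver as-is.
console.log(renderPage({ title: "Fast & simple", body: "Rendered ahead of time" }));
```

The difference between the two techniques is only when the function runs: on every request for SSR, or once during the build for SSG.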

Measuring and monitoring performance

Optimizing the critical rendering path is an ongoing process that requires continuous monitoring and measurement to ensure that performance gains are sustained and to identify new areas for improvement. Monitoring and measurement give developers the insight they need to make data-driven decisions that enhance website speed and user experience.

Web performance metrics to track

Several performance metrics are directly related to the critical rendering path and should be closely monitored:

- Page load time: The time it takes for the entire web page, including all resources, to be loaded and rendered in the browser. This metric provides an overall measure of website performance and user experience.

- Time to First Byte (TTFB): The time it takes for the browser to receive the first byte of data from the server after initiating a request. A high TTFB can indicate server-side performance issues, network latency, or other bottlenecks impacting the critical rendering path.

- First Contentful Paint (FCP): The time it takes for the browser to render the first piece of content from the DOM, such as text, images, or non-white canvas elements. This metric measures the perceived load speed and is an important factor in user experience.

- Largest Contentful Paint (LCP): The time it takes for the largest content element (e.g., a hero image or a text block) to be rendered on the screen. A slow LCP can negatively impact user experience, especially on mobile devices.

- Cumulative Layout Shift (CLS): A measure of the visual stability of a web page, quantifying the amount of unexpected layout shifts that occur during the page’s lifecycle. Minimizing CLS is crucial for providing a smooth and stable user experience.

Tracking these metrics helps developers to identify potential bottlenecks in the critical rendering path and prioritize optimization efforts accordingly.
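To turn raw numbers into something actionable, measurements can be rated against thresholds. The sketch below uses the "good" and "poor" boundaries from Google’s Web Vitals guidance as I understand them at the time of writing; treat the exact values, the THRESHOLDS table, and the function names as assumptions to verify against the current guidance.

```javascript
// Thresholds assumed from Google's Web Vitals guidance
// (times in milliseconds; CLS is a unitless score).
const THRESHOLDS = {
  TTFB: { good: 800, poor: 1800 },
  FCP: { good: 1800, poor: 3000 },
  LCP: { good: 2500, poor: 4000 },
  CLS: { good: 0.1, poor: 0.25 },
};

// Rates a measurement as "good", "needs improvement", or "poor".
function rateMetric(name, value) {
  const limits = THRESHOLDS[name];
  if (!limits) throw new Error(`Unknown metric: ${name}`);
  if (value <= limits.good) return "good";
  if (value <= limits.poor) return "needs improvement";
  return "poor";
}

// Browser-only: report each LCP candidate as the page loads.
function observeLcp(onValue) {
  const observer = new PerformanceObserver((list) => {
    const entries = list.getEntries();
    const latest = entries[entries.length - 1];
    onValue(latest.startTime, rateMetric("LCP", latest.startTime));
  });
  observer.observe({ type: "largest-contentful-paint", buffered: true });
  return observer;
}

console.log(rateMetric("LCP", 3000)); // needs improvement
```

In a page, observeLcp((ms, rating) => console.log(ms, rating)) would log each LCP candidate together with its rating.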

Performance testing tools

Numerous tools are available to assist developers in measuring and analyzing website performance, including:

- Browser developer tools: Modern web browsers have powerful developer tools that provide insights into page load times, network requests, rendering performance, and more. These tools are invaluable for identifying and debugging performance issues.

- Online testing tools: Services like WebPageTest, Lighthouse, and PageSpeed Insights offer comprehensive performance testing and analysis capabilities. These tools simulate real-world user conditions and provide detailed reports on various performance metrics, including those related to the critical rendering path.

- Synthetic monitoring tools: Services like Pingdom, Uptime Robot, and New Relic Synthetics enable developers to set up synthetic monitoring for their websites, continuously testing and reporting on performance from different geographic locations and simulated user conditions.

By leveraging these testing tools, developers can comprehensively understand their website’s performance, identify bottlenecks in the critical rendering path, and make informed decisions about optimization strategies.

Continuous performance optimization

As websites evolve and new features are added, it’s essential to regularly audit and optimize the critical rendering path to maintain fast and responsive user experiences.

Regularly auditing and optimizing the critical rendering path

Periodically conducting comprehensive performance audits is essential for identifying and addressing potential bottlenecks in the critical rendering path. These audits should involve:

- Analyzing performance metrics: Regularly monitoring key performance metrics, such as those discussed in the previous section, can help identify performance regressions or areas that require further optimization.

- Running performance tests: Utilizing various performance testing tools, like WebPageTest, Lighthouse, and browser developer tools, can provide valuable insights into the critical rendering path and pinpoint specific areas for improvement.

- Reviewing code changes: As new features are developed and code is added or modified, it is important to review the impact on the critical rendering path and ensure that performance optimizations are not inadvertently undone.

- Updating optimization techniques: Web performance best practices and optimization techniques constantly evolve. Staying up-to-date with the latest developments and adopting new techniques can help maintain optimal performance.

For example, consider a scenario where a performance audit reveals that a newly added third-party script is causing render-blocking delays. By deferring the script’s execution or implementing code-splitting, developers can mitigate the impact on the critical rendering path and maintain fast load times.
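One way to defer such a script is to inject it only after the page has finished loading. The following is a minimal sketch of that idea; the function name loadThirdPartyScript and the URL are hypothetical, and the document object is passed in as a parameter so the helper can be exercised outside a browser.

```javascript
// Injects a script tag so that it loads without blocking the parser.
function loadThirdPartyScript(doc, src) {
  const script = doc.createElement("script");
  script.src = src;
  script.async = true; // dynamically inserted scripts do not block parsing
  doc.head.appendChild(script);
  return script;
}

// In a browser, wait for the load event so that the third-party script
// cannot compete with the resources needed for the first render.
if (typeof window !== "undefined" && typeof document !== "undefined") {
  window.addEventListener("load", () => {
    // Hypothetical URL, used only for illustration
    loadThirdPartyScript(document, "https://third-party.example/widget.js");
  });
}
```

Waiting for the load event is a conservative choice; scripts that users need sooner could be injected earlier, at the cost of competing with other resources.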

Implementing a performance budget and monitoring process

To maintain a consistent focus on performance optimization, it’s beneficial to establish a performance budget and implement a monitoring process. A performance budget defines the maximum acceptable thresholds for various performance metrics, such as page weight, load times, and resource counts.

By setting a performance budget and continuously monitoring adherence to it, developers can proactively identify and address performance issues before they become significant problems. This approach encourages a culture of performance awareness and helps prioritize optimization efforts throughout the development lifecycle.
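A budget check can be as simple as comparing measured values against agreed limits, for example as a step in a build pipeline. The sketch below illustrates the idea; the metric names and limits in budget are hypothetical values, not recommendations.

```javascript
// Hypothetical budget: maximum acceptable value per metric.
const budget = { pageWeightKb: 500, lcpMs: 2500, requestCount: 50 };

// Returns one message for each metric that exceeds its budget.
function checkBudget(limits, measured) {
  return Object.entries(limits)
    .filter(([metric, max]) => measured[metric] > max)
    .map(([metric, max]) => `${metric}: ${measured[metric]} exceeds budget of ${max}`);
}

const violations = checkBudget(budget, {
  pageWeightKb: 620,
  lcpMs: 2100,
  requestCount: 50,
});
console.log(violations); // [ 'pageWeightKb: 620 exceeds budget of 500' ]
```

Failing the build when the returned list is not empty is one way to keep regressions from reaching production unnoticed.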

Collaborating with cross-functional teams

Optimizing the critical rendering path often requires collaboration across various teams, including developers, designers, and DevOps engineers. By promoting open communication and sharing performance insights, these teams can work together to identify and address performance bottlenecks holistically.

For example, designers can ensure that visual elements are optimized for performance, developers can implement efficient rendering techniques, and DevOps engineers can configure servers and content delivery networks (CDNs) for optimal asset delivery.

Continuous performance optimization is an ongoing commitment that requires a concerted effort from all stakeholders involved in developing and delivering a website. By regularly auditing, implementing best practices, setting performance budgets, and encouraging effective collaboration, organizations can ensure that their websites remain fast, responsive, and deliver exceptional user experiences over time.

Conclusion

Optimizing the critical rendering path has become an important strategy for delivering lightning-fast, responsive websites that captivate users and drive business success. Developers can significantly improve website speed and performance by understanding the underlying components of the critical rendering path and implementing a range of optimization techniques.

In this article, we have explored various strategies to streamline the critical rendering path, from minimizing render-blocking resources and leveraging browser caching to optimizing images and rendering pages on the server or at build time. We have also discussed the importance of measuring and monitoring performance, as well as the continuous efforts required to maintain optimal website speed.
