Optimizing gRPC calls through caching with Redis

Node.js has become a cornerstone of modern microservice architectures, powering the server-side logic of high-performance applications. gRPC makes communication between those microservices efficient, but the journey doesn’t end there. This article shows how to use Redis, a robust in-memory data store and caching solution, to cache data on the server side and speed up gRPC-powered microservices.


gRPC (gRPC Remote Procedure Call) is a cutting-edge framework that revolutionizes inter-service communication in modern microservice architectures. It is designed for efficiency, performance, and ease of use, enabling smooth communication between services regardless of the programming languages they are implemented in.

Moving forward, we will harness the power of gRPC, utilizing it as the backbone for building a Book Catalog application. This application will serve as our testing ground to explore the potential of gRPC in Node.js and then implement Redis for efficient server-side caching and speed enhancements.

To follow along with this tutorial, all you need is Node.js and Redis installed on your machine. This tutorial uses Node.js v18.17.1 and Redis v7.2.4 on a Linux machine running Ubuntu 22.04 LTS.

The code snippets used in this article can be found in this GitHub repository.

Setting Up a Book Catalog Service

In gRPC, services represent the collection of methods a server can expose; this allows clients to make remote procedure calls (RPCs) to perform specific actions or obtain information. Each service is defined using Protocol Buffers (ProtoBuf) that outlines the structure of the messages exchanged between the client and the server. These service definitions, encapsulated within Protocol Buffers files, define the available methods and the data structures exchanged during remote calls. This careful depiction of services forms the backbone of gRPC’s efficiency and ease of use.

To begin, navigate to the directory where you want to create your Node.js project and run the following commands:

mkdir book-catalog-service

cd book-catalog-service

npm init -y

Install the necessary dependencies for gRPC (the gRPC module and the Protocol Buffers loader for Node.js) using npm:

npm install @grpc/grpc-js @grpc/proto-loader

Create a protos directory to store Protocol Buffers (ProtoBuf) files. In this directory, create a file named book.proto with the following content:

syntax = "proto3";

service BookCatalog {

rpc ListBooks (ListBooksRequest) returns (ListBooksResponse);

rpc GetBookDetails (GetBookDetailsRequest) returns (BookDetailsResponse);

}

message BookInfo {

int32 id = 1;

string title = 2;

string author = 3;

string publication_year = 4;

}

message ListBooksRequest {

}

message ListBooksResponse {

string total_books = 1;

repeated BookInfo book_info = 2;

}

message GetBookDetailsRequest {

int32 id = 1;

}

message BookDetailsResponse {

string isbn = 1;

string genre = 2;

string description = 3;

}

This defines the service and messages for our book catalog application. At a high level, the file contains:

- The version of Protocol Buffers used: proto3 syntax.

- A service definition, BookCatalog, with two RPC methods, ListBooks and GetBookDetails, which specify the operations clients can perform on the Book Catalog service.

- Message definitions:

  - BookInfo: Represents the information about a book (ID (unique identifier), title, author, and publication year).

  - ListBooksRequest: Placeholder for any potential request parameters when a client calls the ListBooks RPC.

  - ListBooksResponse: Represents the response structure when a client requests a list of books. It includes the total number of books as a string and an array of BookInfo elements.

  - GetBookDetailsRequest: Represents the request structure when a client calls the GetBookDetails RPC. It includes the unique identifier id of the book for which details are requested.

  - BookDetailsResponse: Represents the response structure when a client requests details for a specific book. It includes information such as ISBN, genre, and description.

Implementing the BookCatalog Service logic

With the structure and definitions in our book.proto file set, it’s time to implement the BookCatalog service logic. This will involve creating a basic gRPC server that handles the gRPC operations specified in the book.proto file.

Create the src directory and an empty books.json file with mkdir -p src && touch src/books.json, then populate the file with the following JSON data.

This JSON data will serve as the initial set of books for our Book Catalog Service.
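
Whatever data you use, the server code in this article reads seven fields from each entry: id, title, author, publication_year, isbn, genre, and description. As a minimal sketch of that shape, the record below uses placeholder values (not entries from the real books.json), and isValidBook is a hypothetical helper that is not part of the original project:

```javascript
// Placeholder record: the values are illustrative, not taken from the real books.json.
const sampleBook = {
  id: 1,
  title: "Example Title",
  author: "Example Author",
  publication_year: "2000",
  isbn: "0000000000000",
  genre: "Example Genre",
  description: "A short description of the book.",
};

// Hypothetical helper: checks that a record has the fields the server reads.
function isValidBook(book) {
  if (typeof book !== "object" || book === null) return false;
  if (!Number.isInteger(book.id)) return false;
  const stringFields = ["title", "author", "publication_year", "isbn", "genre", "description"];
  return stringFields.every((field) => typeof book[field] === "string");
}

console.log(isValidBook(sampleBook)); // true
console.log(isValidBook({ id: "1" })); // false
```

The file itself should contain an array of such records, since the server calls find and map on the parsed result.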

Create a file server.js in the same directory as the books.json and paste in the following piece of code:

const grpc = require("@grpc/grpc-js");

const protoLoader = require("@grpc/proto-loader");

const fs = require("fs");

const path = require("path");

const PROTO_PATH = path.join(__dirname, "../protos/book.proto");

const BOOKS_JSON_PATH = path.join(__dirname, "./books.json");

const packageDefinition = protoLoader.loadSync(PROTO_PATH, { keepCase: true });

const bookCatalogProto = grpc.loadPackageDefinition(packageDefinition);

const server = new grpc.Server();

server.addService(bookCatalogProto.BookCatalog.service, {

ListBooks: listBooks,

GetBookDetails: getBookDetails,

});

server.bindAsync(

"0.0.0.0:50000",

grpc.ServerCredentials.createInsecure(),

(err, port) => {

if (err) {

console.error("Error binding server:", err);

throw err;

}

console.log(`Server bound at http://0.0.0.0:${port}`);

server.start();

},

);

This will create the foundational structure for our gRPC server to handle the operations specified in the book.proto file. So we are initializing the server, setting up the communication protocol, defining the methods the server will respond to (ListBooks and GetBookDetails), and then finally, we bind the server to a specific address and port so it can receive and process requests.

Next, create the supporting functions retrieveBooksFromFile and retrieveBookDetailsFromFile for retrieving book information:

function retrieveBooksFromFile(callback) {

fs.readFile(BOOKS_JSON_PATH, "utf-8", (err, booksData) => {

if (err) {

console.error("Error reading books file:", err);

return callback(err);

}

try {

const books = JSON.parse(booksData);

callback(null, books || []);

} catch (parseErr) {

console.error("Error parsing books file:", parseErr);

callback(parseErr);

}

});

}

function retrieveBookDetailsFromFile(bookId, callback) {

retrieveBooksFromFile((err, books) => {

if (err) {

return callback(err);

}

const bookDetails = books.find((book) => book.id === Number(bookId));

if (bookDetails) {

const response = {

isbn: bookDetails.isbn,

genre: bookDetails.genre,

description: bookDetails.description,

};

callback(null, response);

} else {

callback({ code: grpc.status.NOT_FOUND, details: "Book not found" });

}

});

}

These functions access and retrieve data from the books.json file: retrieveBooksFromFile reads and parses the entire file, and retrieveBookDetailsFromFile extracts the details of a single book identified by bookId.

Next, define a function listBooks that retrieves the entire list of books from the books.json file and sends it back to the client as a response:

function listBooks(_, callback) {

retrieveBooksFromFile((err, books) => {

if (err) {

callback({

code: grpc.status.INTERNAL,

details: "Error listing books",

});

} else {

const response = {

total_books: books.length.toString(),

book_info: books.map((book) => ({

id: book.id,

title: book.title,

author: book.author,

publication_year: book.publication_year,

})),

};

callback(null, response);

}

});

}

This function is a critical part of our gRPC service, as it corresponds to the ListBooks RPC defined in the book.proto file.

Finally, let’s define a function getBookDetails which will be responsible for handling requests for detailed information about a specific book:

function getBookDetails(call, callback) {

const { id } = call.request;

retrieveBookDetailsFromFile(id, (err, bookDetails) => {

if (err) {

callback({

code: grpc.status.INTERNAL,

details: "Error getting book details",

});

} else {

callback(null, bookDetails);

}

});

}

This function takes the book ID (id) from the client’s request, uses it to fetch the corresponding details from the books.json file, and responds with detailed information about the specified book. It corresponds directly to the GetBookDetails RPC method defined in the book.proto file.
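
One detail worth noting: getBookDetails reports every failure as INTERNAL, which hides the NOT_FOUND status that retrieveBookDetailsFromFile produces for an unknown ID. A minimal sketch of one way to preserve it is shown below. The toGrpcError helper is hypothetical, and the numeric constants mirror grpc.status.NOT_FOUND and grpc.status.INTERNAL so the snippet runs without the gRPC package:

```javascript
// Numeric values of the two gRPC status codes used in this article
// (the same values are exposed as grpc.status.NOT_FOUND and grpc.status.INTERNAL).
const STATUS = { NOT_FOUND: 5, INTERNAL: 13 };

// Hypothetical helper: keep an error that already carries a numeric gRPC
// status code, and wrap anything else (for example an fs error whose code
// is a string such as "ENOENT") as INTERNAL.
function toGrpcError(err, fallbackDetails) {
  if (err && typeof err.code === "number") {
    return { code: err.code, details: err.details || fallbackDetails };
  }
  return { code: STATUS.INTERNAL, details: fallbackDetails };
}

console.log(toGrpcError({ code: STATUS.NOT_FOUND, details: "Book not found" }, "Error getting book details"));
console.log(toGrpcError(Object.assign(new Error("no such file"), { code: "ENOENT" }), "Error getting book details"));
```

Inside the handler, this would amount to calling callback(toGrpcError(err, "Error getting book details")) in the error branch.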

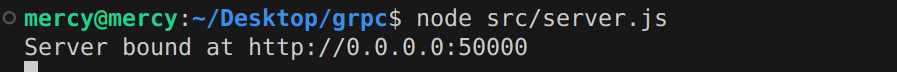

Now proceed to run the server with the following command - node src/server.js and if all goes well, you should see this:

Running the Client

With our server set up, we’ll now focus on executing the client side of our application. The client’s role is interacting with the gRPC server, sending requests, and receiving responses. Create a file client.js in the same directory as the server.js and paste in the following code snippets:

const grpc = require("@grpc/grpc-js");

const protoLoader = require("@grpc/proto-loader");

const path = require("path");

const PROTO_PATH = path.join(__dirname, "../protos/book.proto");

const packageDefinition = protoLoader.loadSync(PROTO_PATH);

const bookCatalogProto = grpc.loadPackageDefinition(packageDefinition);

const client = new bookCatalogProto.BookCatalog(

"localhost:50000",

grpc.credentials.createInsecure(),

);

function listBooks() {

client.ListBooks({}, (error, response) => {

if (!error) {

console.log("List of Books:", JSON.stringify(response, null, 2));

} else {

console.error("Error:", error);

}

});

}

function getBookDetails(bookId) {

client.GetBookDetails({ id: bookId }, (error, response) => {

if (!error) {

console.log("Book Details:", JSON.stringify(response, null, 2));

} else {

console.error("Error:", error);

}

});

}

listBooks();

getBookDetails(8);

Here, we set up a gRPC client to communicate with our gRPC server. The client requests the full list of books and then the details of the book with ID 8.

Run the client with the command node src/client.js. If all goes well, you should see the list of books and the details of the book with ID 8:

// lists of books

List of Books: {

"totalBooks": "15",

"bookInfo": [

{

"id": 1,

"title": "The Catcher in the Rye",

"author": "J.D. Salinger",

"publicationYear": "1951"

},

...

// book details for book with id 8

Book Details: {

"isbn": "9780140268867",

"genre": "Epic Poetry",

"description": "An ancient Greek epic poem about Odysseus' journey home."

}

This confirms that our client is running as expected.
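
You may notice that the output shows totalBooks and publicationYear, while book.proto declares total_books and publication_year. The client calls protoLoader.loadSync without keepCase: true, and by default the loader converts field names to camelCase. The sketch below only approximates that renaming; toCamelCase is a hypothetical helper, not the loader’s actual implementation:

```javascript
// Hypothetical helper: approximates the snake_case -> camelCase renaming
// applied to field names when keepCase is not set.
function toCamelCase(name) {
  return name.replace(/_([a-z])/g, (_, letter) => letter.toUpperCase());
}

console.log(toCamelCase("total_books")); // totalBooks
console.log(toCamelCase("publication_year")); // publicationYear
console.log(toCamelCase("id")); // id
```

Passing { keepCase: true } on the client as well, as the server does, would keep the names exactly as written in the .proto file.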

Integrating Redis

So far, we have set up a basic Book Catalog service with a corresponding client. As the number of requests grows, however, we have to consider performance, which is where Redis comes in. In this section, we’ll integrate Redis into our BookCatalog service so we can cache frequently requested data, thereby reducing response times and server load.

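Open a fresh terminal window and start your Redis server with the redis-server command, unless it is already running as a system service. You can confirm that it is reachable by running redis-cli ping, which should reply with PONG.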

Install the redis client for Node using the following command:

npm i redis

With the Redis client installed, we can integrate it into our application. The goal is to cache the responses of our ListBooks and GetBookDetails methods to improve response times for frequently accessed book data.

Create a file at the root of your directory called redis.js and add the following code:

const redis = require("redis");

const redisClient = redis.createClient({

url: "redis://localhost:6379",

});

redisClient.on("error", (err) => {

console.error("Redis Client Error", err);

});

redisClient.connect();

module.exports = redisClient;

This initializes a Redis client that connects to the Redis server running on our machine and exports it as a module so our server can use it.

Implementing Cache Hit and Miss

After a successful connection to our redis server, the first strategy for optimizing our gRPC book catalog service is to implement the concept of cache hit and miss.

A cache hit occurs when the requested data is already stored in the cache and can be served directly to the client. A cache miss happens when the requested data is not in the cache.
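
The hit-or-miss decision can be sketched independently of Redis. The example below is a minimal illustration that uses an in-memory Map as a stand-in for the Redis client, and getOrLoad is a hypothetical helper name; the Redis-backed functions in this section follow the same flow:

```javascript
// In-memory stand-in for Redis so the sketch runs without a server.
const cache = new Map();

// Hypothetical helper: return the cached value on a hit; on a miss, call
// the loader, store its result, and return it.
async function getOrLoad(key, loader) {
  if (cache.has(key)) {
    return { value: JSON.parse(cache.get(key)), hit: true };
  }
  const value = await loader();
  cache.set(key, JSON.stringify(value));
  return { value, hit: false };
}

// Usage: the loader stands in for reading books.json.
async function demo() {
  const loadBooks = async () => [{ id: 1, title: "Example Title" }];
  const first = await getOrLoad("books", loadBooks);
  const second = await getOrLoad("books", loadBooks);
  console.log(first.hit, second.hit); // false true
}

demo();
```

Values are serialized with JSON.stringify before being stored because Redis stores strings, which is also why the server code parses cached values with JSON.parse.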

Import the redis client module in your server.js file:

const redisClient = require("../redis");

Doing this allows us to interact with the Redis server within our gRPC service handlers.

Next, we’ll need to create a function readAndCacheBooks that encapsulates the logic for reading book data from the books.json file and caching it in Redis.

This function ensures that both the full list of books and the details of each individual book are cached. It is only called on a cache miss, so books are read from the file only when they are not already present in the Redis cache:

async function readAndCacheBooks() {

console.log("Fetching books from file");

try {

const booksData = fs.readFileSync(BOOKS_JSON_PATH, "utf-8");

const books = JSON.parse(booksData);

await redisClient.set("books", JSON.stringify(books));

for (const book of books) {

await redisClient.set(`bookDetails:${book.id}`, JSON.stringify(book));

}

return books;

} catch (err) {

console.error("Error reading or parsing books file:", err);

throw err;

}

}

Next, we integrate cache checks into the helper functions that our service handlers call: retrieveBooksFromFile and retrieveBookDetailsFromFile.

retrieveBooksFromFile:

async function retrieveBooksFromFile(callback) {

const cachedBooks = await redisClient.get("books");

if (cachedBooks) {

console.log("Books found in cache");

return callback(null, JSON.parse(cachedBooks));

} else {

const books = await readAndCacheBooks();

callback(null, books);

}

}

retrieveBookDetailsFromFile:

async function retrieveBookDetailsFromFile(bookId, callback) {

const cachedDetails = await redisClient.get(`bookDetails:${bookId}`);

if (cachedDetails) {

console.log(`Details for book ID: ${bookId} found in cache`);

return callback(null, JSON.parse(cachedDetails));

}

console.log(`Fetching details for book ID: ${bookId} from file`);

let books;

const cachedBooks = await redisClient.get("books");

if (cachedBooks) {

books = JSON.parse(cachedBooks);

} else {

books = await readAndCacheBooks();

}

const bookDetails = books.find((book) => book.id === Number(bookId));

if (bookDetails) {

const response = {

isbn: bookDetails.isbn,

genre: bookDetails.genre,

description: bookDetails.description,

};

await redisClient.set(`bookDetails:${bookId}`, JSON.stringify(response));

callback(null, response);

} else {

console.log(`Book ID: ${bookId} not found in file`);

callback({ code: grpc.status.NOT_FOUND, details: "Book not found" });

}

}

When our gRPC server receives a request, these functions first attempt to retrieve the requested data from the Redis cache:

- When a request is received, the server checks if the requested data (for example, the list of books) is available in the cache.

- If the data is found (cache hit), it is immediately returned to the client.

- If the data is not in the cache (cache miss), the server reads it from the books.json file. After retrieving the data, it is stored in the Redis cache.

Now, in our client, we’ll need to calculate response times to get an idea of how long our requests for the lists of books and book details took.

Edit the listBooks and getBookDetails functions to look like the following:

function listBooks() {

const start = Date.now();

client.ListBooks({}, (error, response) => {

const duration = Date.now() - start;

if (!error) {

console.log("List of Books:", JSON.stringify(response, null, 2));

} else {

console.error("Error:", error);

}

console.log(`Response time for ListBooks: ${duration}ms`);

});

}

function getBookDetails(bookId) {

const start = Date.now();

client.GetBookDetails({ id: bookId }, (error, response) => {

const duration = Date.now() - start;

if (!error) {

console.log("Book Details:", JSON.stringify(response, null, 2));

} else {

console.error("Error:", error);

}

console.log(`Response time for GetBookDetails: ${duration}ms`);

});

}

Restart your server, run your client for the first time, and take note of the response times:

Response time for ListBooks: 45ms

Response time for GetBookDetails: 43ms

When you view your server output, you’ll see the following, which is expected:

Fetching books from file

Fetching details for book ID: 8 from file

Now run your client for the second time and take note of the response times:

Response time for GetBookDetails: 33ms

Response time for ListBooks: 31ms

When you view the server output, you’ll see the following, which is also expected:

Books found in cache

Details for book ID: 8 found in cache

If you now request only the details of a book with a different ID, for example getBookDetails(5), the response also comes from the cache, because readAndCacheBooks cached the details of every book on the first miss:

Details for book ID: 5 found in cache

At this point, you have successfully implemented your first caching strategy: cache hit and miss.
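
The timings above come from Date.now(), which has millisecond resolution and follows the wall clock, so small differences between cached and uncached calls can get lost in the noise. As a hedged alternative, the sketch below uses Node’s monotonic process.hrtime.bigint() clock; timeAsync is a hypothetical helper, and the timed operation is a stand-in for a promisified gRPC call:

```javascript
// Hypothetical helper: times an async operation with the monotonic,
// nanosecond-resolution clock and reports the duration in milliseconds.
async function timeAsync(label, operation) {
  const start = process.hrtime.bigint();
  const result = await operation();
  const durationMs = Number(process.hrtime.bigint() - start) / 1e6;
  console.log(`Response time for ${label}: ${durationMs.toFixed(3)}ms`);
  return { result, durationMs };
}

// Usage with a stand-in operation that resolves after roughly 20 ms.
timeAsync("demo", () => new Promise((resolve) => setTimeout(() => resolve("done"), 20)));
```

A single run is still a noisy measurement; repeating each call several times and comparing averages gives a fairer picture of what the cache saves.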

Implementing Cache Refresh

Implementing cache hit-and-miss is an important first step, but it doesn’t guarantee the ongoing accuracy and relevance of the cached data. To address this, the next strategy we’ll be implementing is a cache refresh. This approach ensures that the data served from the cache remains accurate and relevant over time.

In your server.js, start by defining the TTL for your cache entries:

const CACHE_TTL = 120;

This sets the cache time to live (TTL) to 120 seconds, so cached data expires two minutes after being set.

Next, we need to update our caching logic to include this TTL:

async function readAndCacheBooks() {

console.log("Fetching books from file");

try {

const booksData = fs.readFileSync(BOOKS_JSON_PATH, "utf-8");

const books = JSON.parse(booksData);

await redisClient.SETEX("books", CACHE_TTL, JSON.stringify(books));

for (const book of books) {

await redisClient.SETEX(

`bookDetails:${book.id}`,

CACHE_TTL,

JSON.stringify(book),

);

}

return books;

} catch (err) {

console.error("Error reading or parsing books file:", err);

throw err;

}

}

This caches the list of books and the individual book details with the same TTL, defined by CACHE_TTL.

Flush your Redis server with the FLUSHALL command (for example, redis-cli FLUSHALL) so that the books and bookDetails keys are recreated with a TTL. This removes all keys from all databases, effectively resetting your Redis server. Once it succeeds, you should get an OK response:

OK

After using FLUSHALL, the keys are repopulated on the next request, and the new keys get the TTL. If your Redis server holds other data you want to keep, use the DEL [key] command to delete selected keys instead.

Restart your server and run your client to fetch only the list of books:

listBooks()

// getBookDetails(5);

Running this for the first time logs a cache miss on the server, and a second run within 120 seconds is served from the cache:

Fetching books from file

Books found in cache

But running it again after 120 seconds yields this:

Fetching books from file

This indicates that the cache has expired and the data is being read and cached again.
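
The expiry behaviour can be reproduced without Redis or a two-minute wait. The sketch below is an in-memory approximation, not how Redis implements expiry: TtlCache is a hypothetical class, and its clock is injectable so the test can jump forward in time:

```javascript
// Hypothetical in-memory cache with per-entry expiry, mimicking the
// SETEX/GET behaviour used above. The clock is injectable so expiry can be
// demonstrated without waiting.
class TtlCache {
  constructor(now = () => Date.now()) {
    this.now = now;
    this.entries = new Map();
  }

  // Store a value that expires ttlSeconds from now.
  setEx(key, ttlSeconds, value) {
    this.entries.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
  }

  // Return the value, or null if the key is missing or has expired.
  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return null;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key);
      return null;
    }
    return entry.value;
  }
}

let fakeTime = 0;
const ttlCache = new TtlCache(() => fakeTime);
ttlCache.setEx("books", 120, "[]");
fakeTime = 119_000;
console.log(ttlCache.get("books")); // [] (still cached)
fakeTime = 120_000;
console.log(ttlCache.get("books")); // null (expired)
```

Choosing the TTL is a trade-off: a shorter value keeps data fresher at the cost of more cache misses, while a longer value does the opposite.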

Implementing Cache Invalidation

The next and final strategy we’ll be looking at is cache invalidation: removing cached entries as soon as the underlying data changes, rather than waiting for them to expire, so that the data served from the cache stays accurate and relevant. Invalidation is usually event-driven, so we’ll create an event that triggers it.

Let’s implement a delete feature to handle cache invalidation in our gRPC service.

Define a method for deleting a book in your book.proto file:

service BookCatalog {

// ... previous service methods ...

rpc DeleteBook(DeleteBookRequest) returns (DeleteBookResponse);

}

message DeleteBookRequest {

int32 id = 1;

}

message DeleteBookResponse {

string message = 1;

}

Head to your server.js file and add the DeleteBook handler to the existing addService call:

server.addService(bookCatalogProto.BookCatalog.service, {

// ... previous handlers ...

DeleteBook: deleteBook,

});

Add the following function to delete a book:

async function deleteBook(call, callback) {

const bookId = call.request.id;

try {

const booksData = fs.readFileSync(BOOKS_JSON_PATH, "utf-8");

let books = JSON.parse(booksData);

const bookIndex = books.findIndex((book) => book.id === bookId);

if (bookIndex === -1) {

return callback({

code: grpc.status.NOT_FOUND,

details: "Book not found",

});

}

books.splice(bookIndex, 1);

fs.writeFileSync(BOOKS_JSON_PATH, JSON.stringify(books, null, 2));

await redisClient.del("books");

const keys = await redisClient.keys("bookDetails:*");

for (const key of keys) {

await redisClient.del(key);

}

callback(null, { message: "Book deleted successfully" });

} catch (err) {

console.error("Error processing delete request:", err);

callback({

code: grpc.status.INTERNAL,

details: "Failed to delete the book",

});

}

}

Here we are:

- Checking first whether a book with the given ID exists in our books file (books.json). If the book is found, we remove it from the array of books and then write the updated array back to the books.json file.

- Invalidating the cache after successfully deleting the book from the file by deleting the books key and all keys matching the pattern bookDetails:*. This ensures that the next request for the list of books and subsequent requests for book details will fetch an updated list from the file and cache it anew.
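
Clearing every bookDetails:* key is simple and safe. Since a delete only affects one book, a narrower alternative is to remove just the list key and that book’s own details key. The sketch below illustrates the idea with an in-memory Map standing in for Redis; invalidateBook is a hypothetical helper, and with the real client the same effect would come from redisClient.del calls on those two keys:

```javascript
// In-memory stand-in for Redis, pre-filled the way readAndCacheBooks would.
const store = new Map([
  ["books", "[...]"],
  ["bookDetails:4", "{...}"],
  ["bookDetails:5", "{...}"],
]);

// Hypothetical helper: drop only the entries made stale by deleting one book.
function invalidateBook(cache, bookId) {
  const staleKeys = ["books", `bookDetails:${bookId}`];
  let removed = 0;
  for (const key of staleKeys) {
    if (cache.delete(key)) removed += 1;
  }
  return removed;
}

console.log(invalidateBook(store, 4)); // 2
console.log([...store.keys()]); // [ 'bookDetails:5' ]
```

The trade-off is that the remaining details entries stay cached, so a later request for another book is a hit rather than a fresh read from the file.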

Head over to your client and implement a function to call the delete method on the server:

function deleteBook(bookId) {

client.DeleteBook({ id: bookId }, (error, response) => {

if (error) {

console.error("Error:", error);

} else {

console.log(response.message);

}

});

}

deleteBook(4);

Restart your server, run your client calling only the listBooks() function, and observe the response on your server:

Books found in cache

Now call only the deleteBook(4) function to delete the book with ID 4 and observe the response on your client:

Book deleted successfully

Finally, call the getBookDetails(10) function to get the book with ID 10 and observe the response on your server:

Fetching details for book ID: 10 from file

Fetching books from file

This means that a cache invalidation was triggered after the deletion, so the next request, whether for the details of a book or for the list of books, is served directly from the books.json file and then cached anew. This way, the cache is kept up-to-date with the most current data, ensuring that deleted books are not erroneously served from the cache in future requests.

By implementing this delete feature, we have effectively implemented event-driven cache invalidation in our gRPC service.

Conclusion

In this guide, we explored various server-side caching strategies with Redis to optimize our gRPC service in our Node.js application. We implemented cache hit and miss, cache refresh by implementing TTL, and then event-driven cache invalidation.

I believe you can agree with me that these caching strategies not only boosted the performance of our gRPC service but also enhanced the overall user experience by providing faster and more accurate responses. You can go ahead and implement these strategies, too, and be on your way to handling complex modern data management gracefully and effectively.