Vitest: a powerful testing framework for Vite

Vite changes the game by offering a blazing-fast dev server that avoids bundling your code during development. Nevertheless, writing tests for a Vite project with a framework such as Jest can be hard because of compatibility problems and complex setup. This article introduces Vitest, a modern test runner built for Vite projects, with sensible defaults and tight integration into your development workflow.


Vitest is a powerful test runner aimed specifically at Vite projects. It fits into Vite’s workflow without requiring complex configuration, so developers can write tests without worrying about setup details. It also supports modern JavaScript features such as ESM imports and top-level await, which keeps test code clean and close to the way application code is written.

Comparing Vitest with Jest for Vite Projects

Selecting a good testing framework is very important for your Vite project. Here is a comparison of Vitest and Jest to help you decide:

| Feature | Vitest | Jest |
|---|---|---|
| Native Integration | Integrates seamlessly with how Vite works | Requires extra configuration to work with a Vite project |
| Modern Syntax Support | Supports ESM imports, top-level await | ESM support is experimental and needs extra setup |
| Monorepo Friendly | Workspaces for efficient testing in monorepos | Can be cumbersome in complex monorepo setups |
| Extensibility | Extend functionality through plugins | Limited extensibility options |
| TypeScript/JSX Support | Integrates seamlessly with TypeScript/JSX | Requires additional configuration |
| In-Source Testing | Optional: Keep tests close to the code | Not supported |
| GUI Dashboard | Optional: Visualize test results | Not available |
| Handling Test Flakes | Built-in retry option for flaky tests | Requires manual configuration or 3rd party libs |

The table summarizes the essential differences between a test runner built around Vite’s workflow and a traditional one.

Getting Started with Vitest

This section walks you through setting up Vitest for your project, using a simple example for every step. Once the test runner is set up, there are multiple ways to test your code with it. In this article, we’ll explore two common testing scenarios: testing basic functions and testing API calls.

Testing Basic Functions

Let’s test a basic function that adds two numbers. This is a good way to get started with the test runner, especially if you are new to testing or working on simple projects. The steps take you through creating a project, installing the test runner, writing a test, and running it.

1. Setting Up a Vite Project (Optional): If you don’t have a project yet, you can quickly create one using the official Vite CLI:

npm init vite@latest my-vite-project

cd my-vite-project

This creates a new project named my-vite-project with the default settings.

2. Install the framework: Add the test runner as a development dependency using npm install:

npm install --save-dev vitest

This installs the framework and adds it to your package.json file under the devDependencies section.

3. Writing Basic Tests: Let’s create a test file named math.test.js to demonstrate writing tests. Here’s the code:

// math.test.js
// Import the testing functions from Vitest
import { expect, test } from 'vitest';

// sum takes two numbers (a and b) and returns their sum
function sum(a, b) {
  return a + b;
}

// The first argument to `test` is a description of the test;
// the second is the function that contains the assertions
test('adds two numbers', () => {
  // Use `expect` to make an assertion about the result:
  // we expect the sum of 2 and 3 to be 5
  expect(sum(2, 3)).toBe(5);
});

This code snippet defines a test to ensure that the sum function correctly adds two numbers. It imports the necessary testing functions, defines a sum function that adds two numbers, and uses an assertion to verify that sum(2, 3) returns 5.

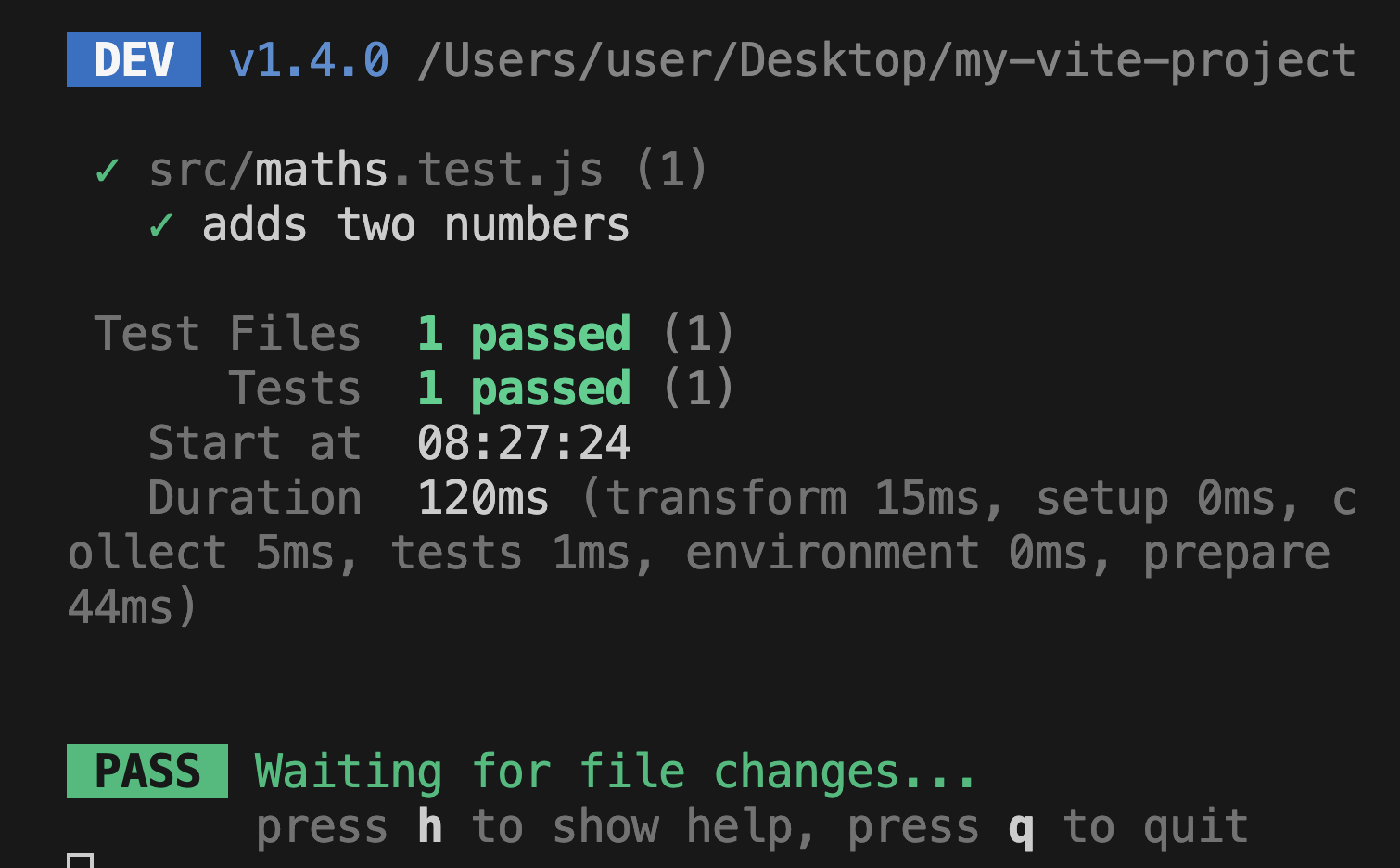

4. Running Tests: To run the test, execute the following command in your terminal:

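npx vitest

The test runner will execute the test in math.test.js and report the results. If the assertion passes, you’ll see a success message. If it fails, you’ll see an error message indicating the issue. In an interactive terminal, this command starts in watch mode and reruns tests as files change; use npx vitest run for a single pass.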

The test report provides a quick overview of the test results, including the number of tests passed and failed, along with the total duration of the test run. This helps developers identify any issues in their code.

5. Integrating with Vite Build Pipeline: The beauty of this framework lies in its integration with Vite’s existing pipeline. Vitest reads the same vite.config.js as your application, so test files go through the same plugins, aliases, and transforms as your application code. By default, it picks up test files with names ending in .test.js or .spec.js. Note that vite build does not run tests on its own. To catch potential issues before deployment, run vitest run ahead of the build step, for example in an npm script or in your CI pipeline.
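To make the shared configuration more concrete, here is a minimal sketch of a vite.config.js that carries a test section. The include pattern and the environment value are illustrative assumptions for this sketch, not settings the article prescribes; Vitest’s defaults already cover .test.js and .spec.js files.

```javascript
// vite.config.js
// defineConfig from 'vitest/config' accepts the usual Vite options plus `test`.
import { defineConfig } from 'vitest/config';

export default defineConfig({
  // Regular Vite options (plugins, resolve.alias, ...) go here and are
  // reused when Vitest transforms your test files.
  test: {
    // Assumed pattern for this sketch; omit it to keep Vitest's defaults.
    include: ['**/*.{test,spec}.js'],
    // 'node' is the default environment; it is spelled out only for clarity.
    environment: 'node',
  },
});
```

Keeping test options next to the application’s Vite options means there is a single place to maintain aliases and plugins for both.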

Testing API Calls

When working with real-world applications, testing API interactions is crucial. Let’s explore how to test various API operations using our test runner. We’ll start by creating a comprehensive userAPI.js file that includes multiple HTTP methods and error handling:

First, let’s look at the getUser function:

// userAPI.js
// getUser is a method of the userAPI object that this file exports
async getUser(userId) {
  const response = await fetch(`https://api.example.com/users/${userId}`);
  if (!response.ok) {
    throw new Error('Failed to fetch user');
  }
  return response.json();
}

This method fetches a user by their ID. It sends a GET request to the API endpoint, checks if the response is successful, and returns the parsed JSON data. If the response is not OK, it throws an error.

Next, let’s examine the createUser function:

// userAPI.js
async createUser(userData) {
  const response = await fetch('https://api.example.com/users', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(userData)
  });
  if (!response.ok) {
    throw new Error('Failed to create user');
  }
  return response.json();
}

This method creates a new user. It sends a POST request to the API endpoint with the user data in the request body. The data is converted to a JSON string. If the response is successful, it returns the newly created user data. Otherwise, it throws an error.

Now, let’s look at the updateUser function:

// userAPI.js
async updateUser(userId, userData) {
  const response = await fetch(`https://api.example.com/users/${userId}`, {
    method: 'PUT',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(userData)
  });
  if (!response.ok) {
    throw new Error('Failed to update user');
  }
  return response.json();
}

This method updates an existing user’s information. It sends a PUT request to the API endpoint with the updated user data in the request body. If the update is successful, it returns the updated user data. If not, it throws an error.

Finally, let’s examine the deleteUser function:

// userAPI.js
async deleteUser(userId) {
  const response = await fetch(`https://api.example.com/users/${userId}`, {
    method: 'DELETE'
  });
  if (!response.ok) {
    throw new Error('Failed to delete user');
  }
  return true;
}

This method deletes a user by their ID. It sends a DELETE request to the API endpoint. If the deletion is successful, it returns true. If the response is not OK, it throws an error.

This userAPI.js file defines functions for interacting with a user API, including operations for getting, creating, updating, and deleting users.
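The four snippets use method shorthand, so they are meant to sit inside one object that the module exposes under the name userAPI (the name the tests import). A minimal sketch of how the file might be assembled is shown here with two of the methods; BASE_URL is a hypothetical constant introduced only to shorten the example.

```javascript
// userAPI.js (sketch): the methods collected in one object
const BASE_URL = 'https://api.example.com/users'; // hypothetical helper constant

const userAPI = {
  async getUser(userId) {
    const response = await fetch(`${BASE_URL}/${userId}`);
    if (!response.ok) {
      throw new Error('Failed to fetch user');
    }
    return response.json();
  },

  async deleteUser(userId) {
    const response = await fetch(`${BASE_URL}/${userId}`, { method: 'DELETE' });
    if (!response.ok) {
      throw new Error('Failed to delete user');
    }
    return true;
  },

  // createUser and updateUser slot in here in the same way.
};

// In the real module, export the object so the tests can import it:
// export { userAPI };
```

Grouping the methods in one exported object is what allows the tests to call userAPI.getUser(...) after a single import.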

Now, let’s write comprehensive tests for these API methods in api.test.js:

// api.test.js
import { beforeEach, expect, test, vi } from 'vitest';
import { userAPI } from './userAPI';

// Replace the global fetch with a mock so no real network requests are made
const mockFetch = vi.fn();
vi.stubGlobal('fetch', mockFetch);

// Clear recorded calls and queued responses before each test
beforeEach(() => {
  mockFetch.mockReset();
});

test('getUser fetches user data successfully', async () => {
  const mockUser = { id: 1, name: 'John Doe' };
  mockFetch.mockResolvedValueOnce({
    ok: true,
    json: () => Promise.resolve(mockUser)
  });

  const user = await userAPI.getUser(1);

  expect(user).toEqual(mockUser);
  expect(mockFetch).toHaveBeenCalledWith('https://api.example.com/users/1');
});

This first test case focuses on the getUser function. Let’s break it down:

- In our tests, we mock the `fetch` function to control its behavior.

- We set up a mock user object that our API call should return.

- We use `mockFetch.mockResolvedValueOnce()` to simulate a successful API response with our mock user data.

- We call `userAPI.getUser(1)` and use `expect()` to verify that the returned user data matches our mock user and that the `fetch` function was called with the correct URL.
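To make the mocking steps above less abstract, the following is a hand-rolled stand-in for what the test relies on: a function that records its calls and returns a queued value exactly once. It is only an illustration; createOneShotMock and resolveOnce are hypothetical names, and this is not how Vitest implements vi.fn() internally.

```javascript
// Hand-rolled illustration of a one-shot mock (not Vitest's implementation).
function createOneShotMock() {
  const queue = []; // values waiting to be returned, one per call
  const calls = []; // arguments of every call, in order

  async function mock(...args) {
    calls.push(args);
    // Return the next queued value, or undefined once the queue is empty.
    return queue.shift();
  }

  mock.calls = calls;
  mock.resolveOnce = (value) => {
    queue.push(value);
    return mock;
  };
  return mock;
}

// Queue one fake "successful response", as the getUser test does.
const fakeFetch = createOneShotMock().resolveOnce({
  ok: true,
  json: async () => ({ id: 1, name: 'John Doe' }),
});
```

The first call to fakeFetch resolves to the queued object and later calls resolve to undefined, which is why each test in this article queues its own response before calling the API method.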

Now, let’s look at the createUser test:

// api.test.js
test('createUser sends POST request with user data', async () => {
  const newUser = { name: 'Jane Doe', email: 'jane@example.com' };
  const mockResponse = { id: 2, ...newUser };
  mockFetch.mockResolvedValueOnce({
    ok: true,
    json: () => Promise.resolve(mockResponse)
  });

  const createdUser = await userAPI.createUser(newUser);

  expect(createdUser).toEqual(mockResponse);
  expect(mockFetch).toHaveBeenCalledWith(
    'https://api.example.com/users',
    expect.objectContaining({
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(newUser)
    })
  );
});

In this test for the createUser function:

- We create a mock new user object and a mock response.

- We simulate a successful API response for creating a user.

- We call `userAPI.createUser(newUser)` and verify that the created user matches our mock response.

- We also check that the `fetch` function was called with the correct URL, method, headers, and body.

Let’s continue with the updateUser test:

// api.test.js
test('updateUser sends PUT request with updated data', async () => {
  const userId = 1;
  const updatedData = { name: 'John Updated' };
  const mockResponse = { id: userId, ...updatedData };
  mockFetch.mockResolvedValueOnce({
    ok: true,
    json: () => Promise.resolve(mockResponse)
  });

  const updatedUser = await userAPI.updateUser(userId, updatedData);

  expect(updatedUser).toEqual(mockResponse);
  expect(mockFetch).toHaveBeenCalledWith(
    `https://api.example.com/users/${userId}`,
    expect.objectContaining({
      method: 'PUT',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(updatedData)
    })
  );
});

This test verifies the updateUser function. Here’s what it does:

- It sets up test data: a user ID and updated user information.

- It creates a mock response that the API would typically return.

- It configures the mock fetch function to return a successful response with the mock data.

- It calls the `updateUser` function with the test data.

- It checks if the returned data matches the expected mock response.

- Finally, it verifies that `fetch` was called with the correct URL, method (PUT), headers, and body data.

Now, let’s look at the deleteUser test:

// api.test.js
test('deleteUser sends DELETE request', async () => {
  const userId = 1;
  mockFetch.mockResolvedValueOnce({ ok: true });

  const result = await userAPI.deleteUser(userId);

  expect(result).toBe(true);
  expect(mockFetch).toHaveBeenCalledWith(
    `https://api.example.com/users/${userId}`,
    expect.objectContaining({ method: 'DELETE' })
  );
});

This test checks the deleteUser function:

- It sets up a test user ID.

- It configures the mock fetch function to return a successful response.

- It calls the `deleteUser` function with the test user ID.

- It verifies that the function returns `true`, indicating a successful deletion.

- Lastly, it checks if `fetch` was called with the correct URL and the DELETE method.

Finally, let’s look at error-handling tests:

// api.test.js
test('getUser handles error response', async () => {
  mockFetch.mockResolvedValueOnce({ ok: false });
  await expect(userAPI.getUser(1)).rejects.toThrow('Failed to fetch user');
});

// The same pattern covers error handling in the other API methods
test('createUser handles error response', async () => {
  mockFetch.mockResolvedValueOnce({ ok: false });
  await expect(userAPI.createUser({})).rejects.toThrow('Failed to create user');
});

test('updateUser handles error response', async () => {
  mockFetch.mockResolvedValueOnce({ ok: false });
  await expect(userAPI.updateUser(1, {})).rejects.toThrow('Failed to update user');
});

test('deleteUser handles error response', async () => {
  mockFetch.mockResolvedValueOnce({ ok: false });
  await expect(userAPI.deleteUser(1)).rejects.toThrow('Failed to delete user');
});

These tests simulate API error responses and verify that each function throws an error with the expected message when the response is not successful.

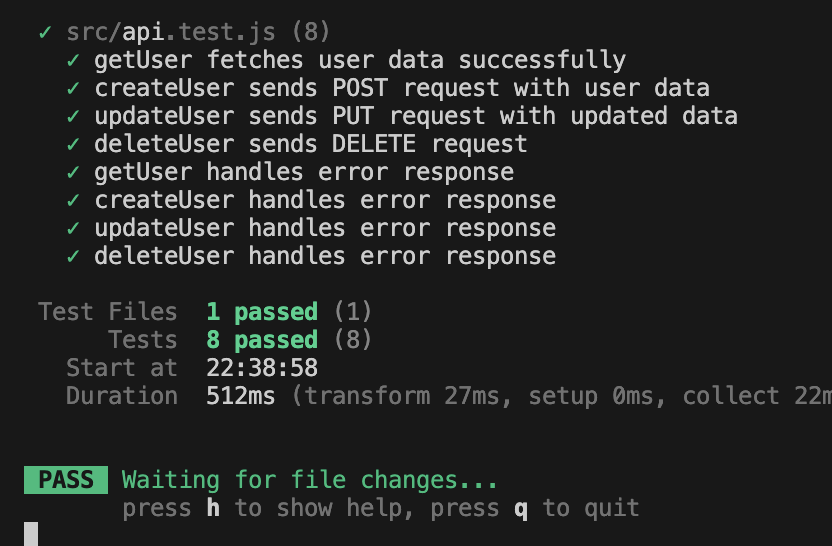

This image displays a test report for API-related tests. It shows multiple passed tests, each represented by a green checkmark. The report includes the test descriptions, such as getUser fetching user data successfully and createUser sending a POST request with user data. At the bottom, it summarizes the total number of tests run, all of which have passed, along with the total test duration.
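The mocked responses in the API tests are plain objects that carry only ok and json. If you prefer mocks that behave more like real responses, one option is to build actual Response objects, which are available globally in browsers and in Node.js 18 and later. The jsonResponse helper below is a hypothetical name used for this sketch.

```javascript
// Build real Response objects so `ok` is derived from the status code,
// exactly as it would be for a response coming from the network.
function jsonResponse(body, status = 200) {
  return new Response(JSON.stringify(body), {
    status,
    headers: { 'Content-Type': 'application/json' },
  });
}

// Statuses in the 200-299 range give ok === true; anything else gives false.
const okResponse = jsonResponse({ id: 1, name: 'John Doe' });
const notFound = jsonResponse({ message: 'Not found' }, 404);
```

A value such as notFound could be passed to mockFetch.mockResolvedValueOnce() in place of { ok: false }, so the error-handling tests exercise the same status logic as production code.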

DOM Testing with Vitest

Vitest handles DOM testing well: with a simulated browser environment such as jsdom, it can exercise DOM manipulation and user interactions without a real browser. This section goes through the steps to set up and run DOM tests.

Setting Up jsdom

To simulate a browser environment in your tests, you’ll use jsdom. Configure your testing setup to use the jsdom environment by setting the test.environment option to 'jsdom' in your vite.config.js file, and install the jsdom package as a development dependency.
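A minimal sketch of that setting, assuming the project keeps its Vitest options in vite.config.js under the test key:

```javascript
// vite.config.js
import { defineConfig } from 'vitest/config';

export default defineConfig({
  test: {
    // Run tests in a jsdom-backed environment so `document` and `window` exist.
    environment: 'jsdom',
  },
});
```

With this environment active, document and window are already defined in every test file. Vitest also accepts a `// @vitest-environment jsdom` comment at the top of a single test file if only some files need a DOM.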

npm install --save-dev jsdom

Testing DOM Manipulation

Let’s create a simple function that manipulates the DOM and then test it:

// dom-utils.js
// Function to create a greeting element and add it to the DOM
export function createGreeting(name) {
  // Create a new div element
  const div = document.createElement('div');
  // Set the text content of the div
  div.textContent = `Hello, ${name}!`;
  // Add a CSS class to the div
  div.className = 'greeting';
  // Append the div to the document body
  document.body.appendChild(div);
  // Return the created element
  return div;
}

This createGreeting function creates a new div element, sets its text content and class, and appends it to the document body.

Now, let’s write a test for this function:

// dom-utils.test.js
import { expect, test, beforeEach } from 'vitest';
import { JSDOM } from 'jsdom';
import { createGreeting } from './dom-utils';

// Set up a fresh DOM before each test
beforeEach(() => {
  // Create a new JSDOM instance with a basic HTML structure
  const dom = new JSDOM('<!DOCTYPE html><html><body></body></html>');
  // Set the global document and window objects to use the JSDOM instance
  global.document = dom.window.document;
  global.window = dom.window;
});

test('createGreeting adds a greeting to the DOM', () => {
  // Arrange: clear the body content before the test
  document.body.innerHTML = '';

  // Act: call the createGreeting function
  const element = createGreeting('Vitest');

  // Assert: check if the greeting text is correct
  expect(element.textContent).toBe('Hello, Vitest!');
  // Assert: check if the correct CSS class is applied
  expect(element.className).toBe('greeting');
  // Assert: check if the element is actually in the document body
  expect(document.body.contains(element)).toBe(true);
});

This test verifies that the createGreeting function correctly creates and appends a greeting element to the DOM.

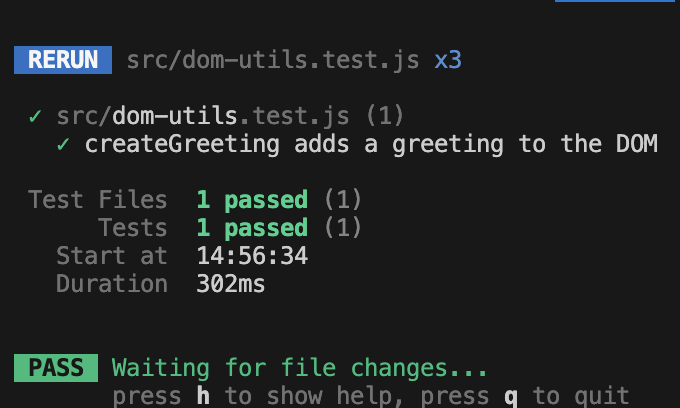

This image shows a test report for DOM-related tests. It displays a single passed test, indicated by a green checkmark. The report also includes the total number of tests run (1) and the test duration.


Testing Event Listeners

Let’s create a simple counter component with an event listener:

// counter.js
export function createCounter() {
  const button = document.createElement('button');
  button.textContent = 'Count: 0';
  let count = 0;

  button.addEventListener('click', () => {
    count++;
    button.textContent = `Count: ${count}`;
  });

  return button;
}

This createCounter function creates a button that increments a counter when clicked.

Now, let’s test this component:

// counter.test.js
import { expect, test, beforeEach } from 'vitest';
import { JSDOM } from 'jsdom';
import { createCounter } from './counter';

beforeEach(() => {
  const dom = new JSDOM('<!DOCTYPE html><html><body></body></html>');
  global.document = dom.window.document;
  global.window = dom.window;
});

test('counter increments when clicked', () => {
  const counter = createCounter();
  document.body.appendChild(counter);

  expect(counter.textContent).toBe('Count: 0');

  counter.click();
  expect(counter.textContent).toBe('Count: 1');

  counter.click();
  counter.click();
  expect(counter.textContent).toBe('Count: 3');
});

This test verifies that the counter component correctly increments its count when clicked.

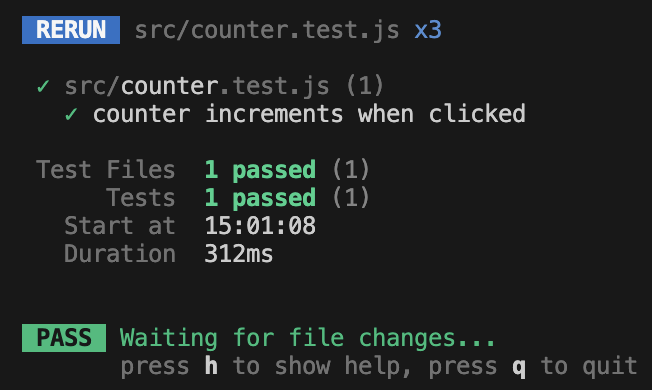

This image presents a test report for a counter component. It shows one passed test, marked with a green checkmark. The report includes the total number of tests run (1) and the time taken for the test.


In-Source Testing: Keeping Tests Close to Home

The runner provides a cool concept called in-source testing, which enables you to write tests directly alongside the code they test within the same source file. This approach departs from the traditional way of keeping tests in separate files.

Benefits of In-Source Testing

In-source testing offers several benefits beyond developer convenience. First, it makes the code more maintainable by creating a clear link between its functionality and tests. This makes it easier to understand what is being tested and keeps the code and test logic together.

Second, in-source testing improves the developer experience by establishing a better workflow. Developers can write tests while developing features, promoting a Test-Driven Development (TDD) approach.

Finally, in-source testing can simplify the project structure by removing the need for separate test files, which is particularly useful for small projects or isolated components.

Enabling In-Source Testing

In-source testing is not enabled by default. To use it, wrap your tests in an if (import.meta.vitest) block at the end of your source file, and list the source files in the includeSource option of your Vitest configuration so the runner knows to look for tests in them:

// Your component code

// Test block for in-source testing; it only runs under Vitest
if (import.meta.vitest) {
  const { expect, test } = import.meta.vitest;

  test('your test description', () => {
    // Your test assertions here
  });
}

This code snippet demonstrates in-source testing with the runner. Tests alongside the component code are easier to organize and maintain.
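Vitest discovers in-source tests through its includeSource option, and it exposes the test API to source files through import.meta.vitest. A minimal configuration sketch follows; the src/ glob is an assumption about the project layout, not something the article specifies.

```javascript
// vite.config.js
import { defineConfig } from 'vitest/config';

export default defineConfig({
  test: {
    // Assumed glob: look for in-source test blocks in files under src/.
    includeSource: ['src/**/*.{js,ts}'],
  },
  define: {
    // Replaces import.meta.vitest at build time so the bundler can drop
    // in-source test blocks from production builds.
    'import.meta.vitest': 'undefined',
  },
});
```

The define entry matters mostly for production: without it, the guarded test code would still be shipped, even though it never runs.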

Modern test runners often offer in-source testing, allowing tests to live beside the code they verify. This method benefits developers by making tests easier to read and maintain, especially in smaller, self-contained components. The proximity of tests to the code makes it simpler to see how a component functions and is tested.

Moreover, in-source testing allows you to test private variables or functions within the same scope. This simplifies test logic since you no longer need external mechanisms to interact with private members of the component. Lastly, in-source testing facilitates Test-Driven Development (TDD), enabling you to write features and tests iteratively, keeping the tests aligned with specific pieces of code.

Visualizing Tests with a GUI

This runner provides an optional GUI dashboard via the @vitest/ui package. While not essential, it can improve your experience by offering an interface for managing and reviewing test results.

Installation

To use the GUI dashboard, add it to your project as a development dependency:

npm install --save-dev @vitest/ui

Using the GUI Dashboard

Once installed, start the runner with the --ui flag:

npx vitest --ui

This will launch the GUI dashboard in your web browser. Here’s what you can expect:

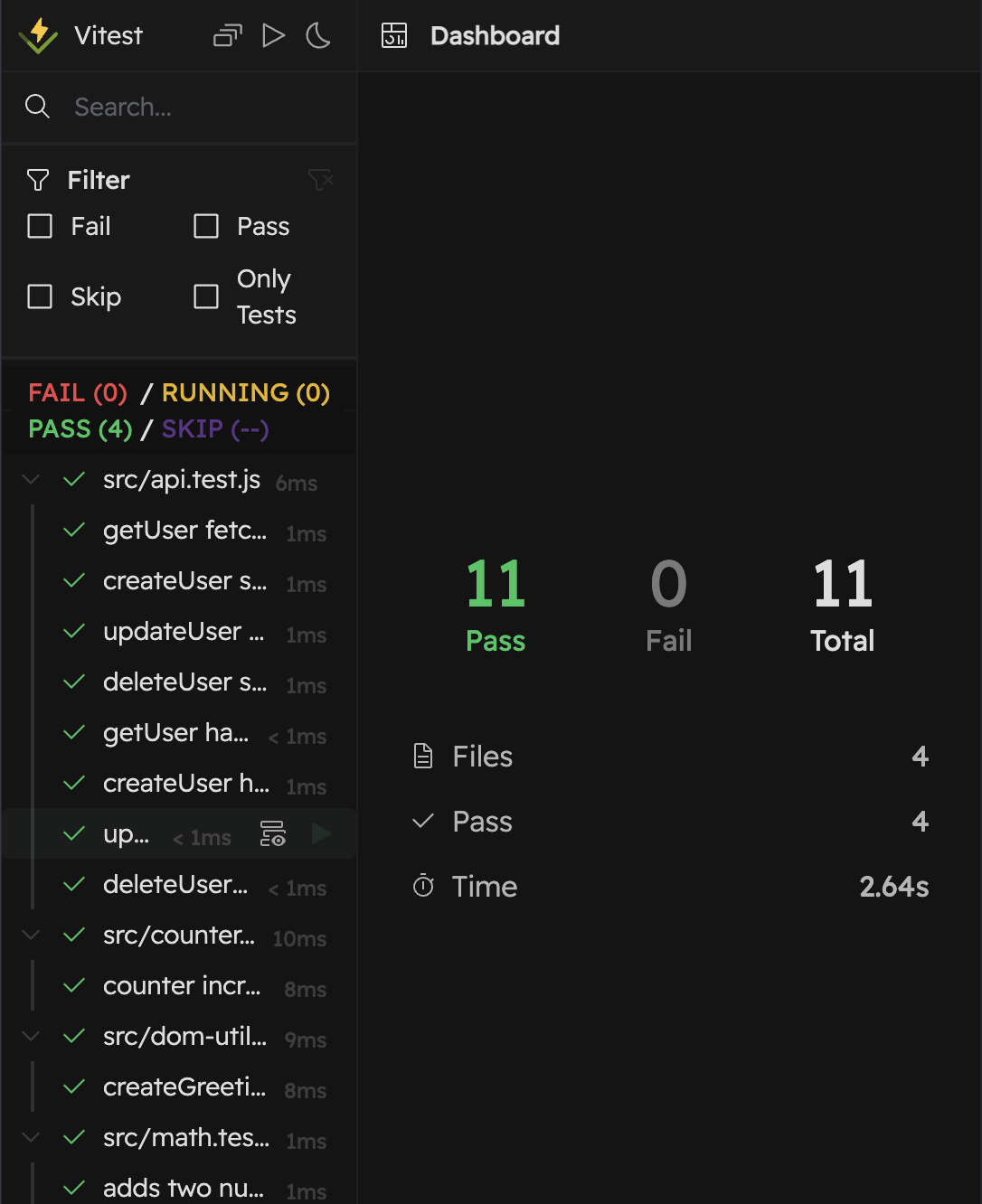


This GUI dashboard displays results for all the tests covered in this article. On the left, you can see a list of test files, including our basic function tests, API tests, DOM manipulation tests, and the counter test. Each test shows its pass/fail status and execution time. The right panel provides more detailed information about selected tests, including any errors or console output. This comprehensive view allows developers to quickly assess the health of their entire test suite and drill down into specific test results as needed.

Benefits of GUI Support

The runner dashboard is more than just an add-on—it’s a gateway to effective testing practices. The dashboard offers a better test overview, visually presenting tests so you can quickly identify failed or skipped ones.

Detailed test results and stack traces help with fast debugging, making it easier to locate issues in failing tests. The dashboard also provides efficient test management tools, such as filtering and search options, which are useful for navigating large test lists. Finally, the GUI supports collaborative testing efforts, valuable during team discussions and test result demonstrations.

Taming Flaky Tests: Retries & More

Even good tests can occasionally break, causing frustration or a loss of trust. The framework provides a mechanism to handle these flaky tests by automatically retrying them upon failure.

Configuring Retries

By default, the framework doesn’t retry failed tests. However, you can configure retries through the retry option in the test function:

test('flaky test', { retry: 3 }, () => {
  // Your test logic here
});

This example sets the retry option of a flaky test to 3. If the test fails initially, the framework will rerun it up to 3 times before marking it as a final failure.
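Retries can also be configured for the whole project rather than per test. As a minimal sketch, assuming the Vitest options live in vite.config.js, the retry option under test applies to every test; the value 2 is an arbitrary choice for illustration.

```javascript
// vite.config.js
import { defineConfig } from 'vitest/config';

export default defineConfig({
  test: {
    // Retry every failing test up to 2 more times (the default is 0).
    retry: 2,
  },
});
```

A per-test retry value is usually the safer starting point, since a global setting can hide flakiness that deserves a closer look.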

Identifying and Managing Flaky Tests

Here are some tips for troubleshooting flaky tests:

- Analyze Failure Reasons: Review failure messages and logs to understand why a test fails. Network issues, timing problems, or external dependencies can cause flakiness.

- Isolate Flaky Logic: Refactor or rewrite the flaky logic to reduce external dependencies and focus on the core functionality being tested.

- Mock External Dependencies: Consider mocking external resources like APIs or databases to isolate your tests and avoid flaky failures due to those externals.

- Flaky Test Markers (Optional): This runner doesn’t have built-in flaky test markers, but you can implement a custom solution using conditional logic within the test to identify and report them.
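As one possible shape for the custom marker mentioned in the last tip, the sketch below wraps a check in a retry loop and labels it flaky when it passes only after a retry. runWithFlakyReport is a hypothetical helper written for this illustration and is independent of Vitest’s own retry option.

```javascript
// Illustration only: run a check up to `retries + 1` times and report whether
// it passed on the first attempt, passed after retrying (flaky), or failed.
async function runWithFlakyReport(name, fn, retries = 3) {
  let lastError;
  for (let attempt = 1; attempt <= retries + 1; attempt++) {
    try {
      await fn();
      const status = attempt === 1 ? 'passed' : 'flaky';
      return { name, status, attempts: attempt };
    } catch (error) {
      lastError = error; // remember the most recent failure and try again
    }
  }
  return { name, status: 'failed', attempts: retries + 1, error: lastError };
}

// Example: a check that fails twice before succeeding.
let remainingFailures = 2;
const report = runWithFlakyReport('unstable check', () => {
  if (remainingFailures-- > 0) {
    throw new Error('temporary failure');
  }
});
```

Here report resolves to an object whose status is 'flaky' and whose attempts is 3, which could be logged or collected to track which checks need attention.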

Conclusion

This testing framework, seamlessly integrated with Vite, is a powerful and flexible solution for modern web development. As this article showed, it handles various types of tests: API calls, error handling, DOM manipulation, and event listeners. Developers can use it to keep their applications stable and of good quality.

Because it plugs into Vite’s fast workflow, testing is easy to start and needs minimal configuration. It suits both front-end and back-end code, since it can simulate browser environments using jsdom. This approach makes unit testing a normal part of the development process, contributing to overall quality and reliability in your projects.
